Introduction

Definition of Bias and Variance in Machine Learning

In the world of machine learning, understanding the concepts of bias and variance is critical for developing effective models.

- Bias refers to the error due to overly simplistic assumptions in the learning algorithm. When a model has high bias, it tends to miss relevant relations between features and target outputs, leading to underfitting. For example, if you were trying to fit a linear model to data that clearly has a quadratic relationship, the model would perform poorly because it simply can’t capture the complexity of the data.

- Variance, on the other hand, indicates how much the model’s predictions would change if it were trained on a different dataset. A model with high variance pays too much attention to the training data, learning noise along with the signal, resulting in overfitting. An example of this might be a complex decision tree that perfectly classifies the training data but performs poorly on new, unseen data.
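For squared-error loss, the two ideas combine in the standard decomposition of expected prediction error. The notation here is ours rather than the article's. We assume data come from $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma^2$, and $\hat f_D$ is the model fitted on a randomly drawn training set $D$:

```latex
\mathbb{E}_{D,\varepsilon}\big[(y - \hat f_D(x))^2\big]
  = \underbrace{\big(\mathbb{E}_D[\hat f_D(x)] - f(x)\big)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}_D\big[(\hat f_D(x) - \mathbb{E}_D[\hat f_D(x)])^2\big]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

At a fixed input $x$, underfitting inflates the first term and overfitting inflates the second. No model can reduce the third.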

Significance of Bias-Variance Tradeoff

The significance of the bias-variance tradeoff lies in its ability to guide practitioners in selecting the right model complexity. Striking a balance between bias and variance is essential for generalizing well on unseen data. Here’s why it matters:

- Optimizing Performance: Understanding this tradeoff helps in minimizing prediction error, leading to models that perform well across varied scenarios.

- Model Selection: Recognizing the tradeoff can guide decisions on whether to simplify a model or increase its complexity.

- Real-World Applications: It plays a pivotal role in practically every application of machine learning, from finance to healthcare, making it an indispensable concept for anyone looking to make impactful predictions.

By grappling with these concepts, one can greatly improve their machine learning models and achieve more accurate results.

Bias-Variance Tradeoff Explained

Understanding Bias

Building on the foundation of concepts like bias and variance, it’s crucial to dive deeper into what bias truly means in machine learning. Bias reflects the simplifying assumptions a model makes about the problem to make learning tractable. The stronger those assumptions, the more of the true pattern the model may fail to represent.

- Characteristics of High Bias:

- Models may overlook important relationships.

- Common in algorithms like linear regression when data is nonlinear.

- Example: Imagine trying to predict housing prices using only the size of the house. If you ignore other factors like location, condition, and market trends, your predictions will likely miss the mark.
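The "linear regression on nonlinear data" case can be made concrete. The sketch below uses synthetic data generated from $y = x^2$ plus small noise. This is an illustrative assumption, not a real dataset. The code fits a straight line by ordinary least squares and compares its training error with that of the true curve. The line's error stays high even on the data it was trained on, which is the signature of bias: a line cannot bend.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (closed form)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return a, b

def mse(preds, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

rng = random.Random(0)
noise_sd = 0.1
# Hypothetical data with a clearly quadratic relationship plus small noise.
xs = [rng.uniform(-3, 3) for _ in range(500)]
ys = [x ** 2 + rng.gauss(0, noise_sd) for x in xs]

a, b = fit_line(xs, ys)
train_mse_line = mse([a + b * x for x in xs], ys)
# Reference: a model with the right shape (here, the true curve itself).
train_mse_quad = mse([x ** 2 for x in xs], ys)

print(f"straight line, training MSE:  {train_mse_line:.3f}")
print(f"true quadratic, training MSE: {train_mse_quad:.3f}")
```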

Understanding Variance

Now, let’s shift gears and talk about variance. Variance measures how sensitive a model is to fluctuations in the training data.

- Characteristics of High Variance:

- Models fit the training data very closely but generalize poorly to new data.

- Common in complex models such as deep decision trees.

- Example: Think of a decision tree that adapts perfectly to every data point in the training set, but when the real world throws somewhat different data at it, it stumbles.
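You can observe variance directly. Train the same kind of model on two independent samples and measure how much its predictions move between them. The sketch below uses k-nearest-neighbour regression as a stand-in for a flexible model, because it needs no libraries; the text itself uses decision trees. The data are a synthetic sine wave. With k = 1 the model memorizes every training point, much like a fully grown tree, so its predictions should shift a lot between samples. Averaging over 20 neighbours makes it far more stable.

```python
import math
import random

def knn_predict(train, x, k):
    """k-nearest-neighbour regression: average the targets of the k closest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def sample(rng, n, noise_sd=0.3):
    """Hypothetical data-generating process: y = sin(x) + Gaussian noise."""
    pts = []
    for _ in range(n):
        x = rng.uniform(0, 2 * math.pi)
        pts.append((x, math.sin(x) + rng.gauss(0, noise_sd)))
    return pts

rng = random.Random(1)
train_a = sample(rng, 200)
train_b = sample(rng, 200)  # an independent training set from the same process
grid = [i * 2 * math.pi / 50 for i in range(51)]

changes = {}
for k in (1, 20):
    diffs = [abs(knn_predict(train_a, x, k) - knn_predict(train_b, x, k)) for x in grid]
    changes[k] = sum(diffs) / len(diffs)
    print(f"k={k:2d}: mean |change in prediction| between training sets = {changes[k]:.3f}")
```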

Balancing Bias and Variance

Finding the sweet spot between bias and variance can feel like a balancing act. Here are some tips:

- Start Simple: Begin with a simpler model and gradually increase complexity as needed.

- Use Regularization: Techniques like Lasso or Ridge regression can help control variance.

- Evaluate Performance: Use cross-validation to assess how well the model generalizes.

By understanding and balancing bias with variance, machine learning practitioners can significantly enhance model performance and ultimately achieve more reliable predictions.

Factors Influencing the Bias-Variance Tradeoff

Model Complexity

Now that we’ve established the concepts of bias and variance, let’s explore the factors influencing the bias-variance tradeoff. One of the most significant factors is model complexity.

- Low Complexity: Simple models, such as linear regression, tend to have higher bias but lower variance.

- High Complexity: Conversely, complex models like neural networks can adapt to data intricacies but may also capture noise, resulting in high variance.

Imagine choosing a high-tech Ferrari for a smooth road versus a reliable SUV for rough terrain. If the terrain is too rough, the Ferrari will struggle, much like a complex model struggling with noisy data.
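The same pattern shows up when complexity is varied systematically. This sketch repeats an experiment many times. Each run draws a fresh synthetic training set from a hypothetical process $y = \sin(x) + \varepsilon$ and fits k-nearest-neighbour regression. Across runs, the code estimates the squared bias and the variance of the predictions at fixed test points. The number of neighbours k acts as an inverse complexity dial. It stands in for the linear-regression-versus-neural-network contrast above and is our choice, not the article's.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def true_f(x):
    return math.sin(x)  # hypothetical ground-truth function

def bias_variance(k, n_train=100, n_trials=100, noise_sd=0.3, seed=0):
    """Monte Carlo estimate of average squared bias and variance of k-NN at fixed test points."""
    rng = random.Random(seed)
    test_xs = [0.5 + i * 0.5 for i in range(11)]  # points spread over [0.5, 5.5]
    preds = {x: [] for x in test_xs}
    for _ in range(n_trials):
        xs = [rng.uniform(0, 2 * math.pi) for _ in range(n_train)]
        train = [(x, true_f(x) + rng.gauss(0, noise_sd)) for x in xs]
        for x in test_xs:
            preds[x].append(knn_predict(train, x, k))
    bias2 = var = 0.0
    for x, ps in preds.items():
        mean_p = sum(ps) / len(ps)
        bias2 += (mean_p - true_f(x)) ** 2                    # squared bias at x
        var += sum((p - mean_p) ** 2 for p in ps) / len(ps)   # variance at x
    return bias2 / len(test_xs), var / len(test_xs)

results = {k: bias_variance(k) for k in (1, 5, 25, 75)}
for k, (b2, v) in results.items():
    print(f"k={k:2d}  bias^2={b2:.4f}  variance={v:.4f}")
```

Small k (a wiggly, complex model) should show near-zero bias and variance close to the noise level. Large k (a smooth, simple model) should show the reverse.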

Dataset Size

Another critical factor is the size of your dataset.

- Small Datasets: Limited data can lead to high variance. A model might overly adapt to the few data points available.

- Large Datasets: Bigger datasets generally provide more information, leading to less variance and a more reliable model.

Think of a poker game; the more hands played, the better your understanding of your opponents’ strategies.
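A quick simulation shows the dataset-size effect. The sketch below uses synthetic data with an assumed true slope of 2 and unit noise. It fits a straight line to many independent training sets of size n and reports how much the fitted slope varies. For least squares, the spread shrinks roughly like $1/\sqrt{n}$, which is the variance term falling as data grows. A line is the correct shape here, so bias is zero. If the shape were wrong, more data would not fix it.

```python
import random
import statistics

def fitted_slope(xs, ys):
    """Ordinary least-squares slope of y on x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def slope_spread(n, n_trials=300, noise_sd=1.0, seed=0):
    """Standard deviation of the fitted slope across independent training sets of size n."""
    rng = random.Random(seed)
    slopes = []
    for _ in range(n_trials):
        xs = [rng.uniform(0, 1) for _ in range(n)]
        ys = [2.0 * x + rng.gauss(0, noise_sd) for x in xs]  # assumed true slope of 2
        slopes.append(fitted_slope(xs, ys))
    return statistics.stdev(slopes)

spreads = {n: slope_spread(n) for n in (10, 100, 1000)}
for n, s in spreads.items():
    print(f"n={n:4d}: std of fitted slope = {s:.3f}")
```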

Noise in Data

Lastly, we can’t overlook the impact of noise in data.

- High Noise: If data is riddled with inaccuracies or irrelevant information, flexible models can end up fitting those misleading signals, which raises variance. The noise also sets a floor on the error that no model can remove.

- Low Noise: Cleaner, more relevant data helps models maintain their focus, leading to a better balance between bias and variance.

Just like trying to hear someone in a crowded room, the less noise there is, the clearer the communication becomes. By assessing these factors, practitioners can effectively navigate the bias-variance tradeoff and enhance model performance.
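You can measure the cost of noise directly. In the sketch below, the data are synthetic with an assumed sine ground truth, and the labels carry Gaussian noise with standard deviation `sd`. Even a model that knows the true function should score about sd² on test data, which is the irreducible error. A 1-nearest-neighbour model copies a noisy neighbour's label, so it has memorized that neighbour's noise too. Its error comes out roughly double.

```python
import math
import random

def one_nn(train, x):
    """1-nearest-neighbour regression: copy the target of the closest training point."""
    return min(train, key=lambda p: abs(p[0] - x))[1]

def noisy_errors(noise_sd, n_train=500, n_test=500, seed=0):
    """Test MSE of the true function and of 1-NN when labels carry Gaussian noise."""
    rng = random.Random(seed)
    f = math.sin  # hypothetical ground-truth function

    def draw(n):
        pts = []
        for _ in range(n):
            x = rng.uniform(0, 2 * math.pi)
            pts.append((x, f(x) + rng.gauss(0, noise_sd)))
        return pts

    train, test = draw(n_train), draw(n_test)
    mse_true = sum((f(x) - y) ** 2 for x, y in test) / n_test
    mse_1nn = sum((one_nn(train, x) - y) ** 2 for x, y in test) / n_test
    return mse_true, mse_1nn

results = {}
for sd in (0.1, 0.5):
    results[sd] = noisy_errors(sd)
    print(f"noise sd={sd}: sigma^2={sd ** 2:.3f}  "
          f"true-function MSE={results[sd][0]:.3f}  1-NN MSE={results[sd][1]:.3f}")
```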

Methods to Handle Bias-Variance Tradeoff

Cross-Validation

Having explored the factors influencing the bias-variance tradeoff, let’s delve into effective methods to manage it. One widely used approach is cross-validation. This technique estimates how well a model will generalize to unseen data, so you can compare candidate models before committing to one.

- What It Is: Cross-validation involves partitioning the dataset into several segments; training occurs on some segments while validating on others.

- Benefits: This process helps in identifying if a model is overfitting or underfitting.

For instance, k-fold cross-validation splits the data into k folds and trains k times, holding out a different fold for validation each time, so every observation is validated on exactly once. Averaging the k scores gives a more robust measure of model performance than a single split. It’s like preparing dishes for a cooking competition. You want feedback from several judges rather than just one!
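A minimal k-fold procedure looks like this. The sketch is plain Python rather than any particular library's API. It shuffles the indices once and splits them into five folds. It then trains on four folds, validates on the fifth, rotates, and averages the five validation errors. The model being tuned is k-nearest-neighbour regression on synthetic data; both are assumptions for illustration. The parameter is named `n_neighbors` to avoid confusion with the number of folds.

```python
import math
import random

def k_fold_indices(n, n_folds, rng):
    """Shuffle indices 0..n-1 and split them into n_folds nearly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::n_folds] for i in range(n_folds)]

def knn_predict(train, x, n_neighbors):
    """Average the targets of the n_neighbors closest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:n_neighbors]
    return sum(y for _, y in nearest) / n_neighbors

def cv_mse(data, n_neighbors, n_folds=5, seed=0):
    """Average validation MSE over n_folds train/validation splits."""
    folds = k_fold_indices(len(data), n_folds, random.Random(seed))
    fold_errors = []
    for fold in folds:
        held_out = set(fold)
        train = [p for i, p in enumerate(data) if i not in held_out]
        val = [data[i] for i in fold]
        err = sum((knn_predict(train, x, n_neighbors) - y) ** 2 for x, y in val) / len(val)
        fold_errors.append(err)
    return sum(fold_errors) / n_folds

# Hypothetical dataset: y = sin(x) + noise.
rng = random.Random(42)
data = []
for _ in range(150):
    x = rng.uniform(0, 2 * math.pi)
    data.append((x, math.sin(x) + rng.gauss(0, 0.3)))

scores = {nn: cv_mse(data, nn) for nn in (1, 10, 100)}
best = min(scores, key=scores.get)
for nn, s in scores.items():
    print(f"n_neighbors={nn:3d}: 5-fold CV MSE = {s:.3f}")
print("selected n_neighbors:", best)
```

The fixed seed reuses the same fold split for every candidate, which keeps the comparison fair. The middle setting should win, because 1 neighbour overfits the noise and 100 neighbours underfits the curve.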

Regularization Techniques

Another method to control the bias-variance tradeoff is through regularization techniques.

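- Lasso and Ridge Regression: These techniques add a penalty term to the loss function, discouraging overly complex models. Lasso penalizes the sum of the absolute coefficient values; Ridge penalizes the sum of the squared coefficients.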

- Benefits: Regularization deliberately accepts a little extra bias in exchange for a larger drop in variance. This keeps the model flexible enough to learn from the data while avoiding excessive fitting.

Think of it as putting a speed limit on a race car; it gives you control to ensure the car doesn’t crash due to unrestrained speed, maintaining a balance of performance and safety.
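With a single feature and no intercept, both penalties have closed-form solutions, which makes the shrinkage easy to see. The sketch below is illustrative only. Libraries such as scikit-learn solve the multi-feature case numerically and may scale the penalty differently. Here `lam` is the penalty strength. The lasso objective uses the common ½-scaled squared error so that its solution is a soft-threshold.

```python
def ridge_coef(xs, ys, lam):
    """Minimise sum (y - w*x)^2 + lam * w^2 for one feature, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)  # shrinks toward 0 but never reaches it for lam < inf

def lasso_coef(xs, ys, lam):
    """Minimise 0.5 * sum (y - w*x)^2 + lam * |w| for one feature, no intercept.
    The solution soft-thresholds the least-squares numerator."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam, 0.0)  # exactly 0 once lam >= |sxy|
    return (shrunk if sxy >= 0 else -shrunk) / sxx

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data lying exactly on y = 2x
for lam in (0.0, 30.0, 90.0):
    print(f"lambda={lam:5.1f}  ridge w={ridge_coef(xs, ys, lam):.3f}  "
          f"lasso w={lasso_coef(xs, ys, lam):.3f}")
```

With these toy numbers the least-squares slope is 2. At λ = 90, ridge shrinks the slope to 0.5, while lasso sets it exactly to 0. This is why lasso is also used for feature selection.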

Ensemble Learning Approaches

Finally, ensemble learning approaches are powerful methods to improve model performance by combining multiple models.

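- Bagging and Boosting: Techniques like Random Forest (bagging) and AdaBoost (boosting) aggregate predictions from multiple models. Bagging averages models trained on bootstrap resamples of the data and mainly reduces variance. Boosting trains models sequentially, each one focusing on the previous ones' mistakes, and mainly reduces bias.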

- Benefits: This often leads to more accurate and stable predictions.

Imagine a sports team where each player excels in a specific skill. Together, they can outperform a single star player by playing to their strengths. By applying these methods—cross-validation, regularization, and ensemble learning—data scientists can navigate the complexities of the bias-variance tradeoff, leading to enhanced model performance and reliability.
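Bagging's variance reduction is easy to check numerically. The sketch below uses plain Python, synthetic sine data, and arbitrarily chosen parameter values. Its base model is deliberately unstable: 1-nearest-neighbour regression. The code compares the spread of that model's prediction at one point across 200 independent training sets with the spread of a bagged version that averages 25 bootstrap fits. Random Forest applies the same idea to decision trees and adds random feature selection; this is not its implementation.

```python
import math
import random
import statistics

def one_nn(train, x):
    """1-nearest-neighbour regression: a deliberately unstable (high-variance) base model."""
    return min(train, key=lambda p: abs(p[0] - x))[1]

def bagged_one_nn(train, x, n_bags, rng):
    """Bagging: average 1-NN predictions fitted on bootstrap resamples of the training set."""
    preds = []
    for _ in range(n_bags):
        boot = [rng.choice(train) for _ in train]  # resample with replacement, same size
        preds.append(one_nn(boot, x))
    return statistics.fmean(preds)

rng = random.Random(7)
x0 = 1.0  # fixed query point
single, bagged = [], []
for _ in range(200):  # independent training sets from a hypothetical y = sin(x) + noise
    train = []
    for _ in range(50):
        x = rng.uniform(0, 2 * math.pi)
        train.append((x, math.sin(x) + rng.gauss(0, 0.3)))
    single.append(one_nn(train, x0))
    bagged.append(bagged_one_nn(train, x0, n_bags=25, rng=rng))

var_single = statistics.pvariance(single)
var_bagged = statistics.pvariance(bagged)
print(f"variance of a single 1-NN prediction: {var_single:.4f}")
print(f"variance of the bagged prediction:    {var_bagged:.4f}")
```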

Practical Examples and Case Studies

Application of Bias-Variance Tradeoff in Regression

Now that we’ve explored effective methods for managing the bias-variance tradeoff, let’s look at practical applications in real-world scenarios. Starting with regression, consider predicting housing prices based on various features like location, size, and amenities.

- High Bias Example: If a linear regression model is used, it might miss nonlinear relationships, resulting in consistently inaccurate predictions. This represents high bias as it oversimplifies complex patterns in the data.

- High Variance Example: On the other hand, a highly complex polynomial regression might perfectly fit the training data. However, it can struggle with new listings, demonstrating high variance.

Thus, employing techniques like cross-validation helps in selecting the right model complexity, ensuring predictions are both accurate and generalizable.

Application of Bias-Variance Tradeoff in Classification

When it comes to classification, the bias-variance tradeoff can be illustrated through a common example: email spam detection.

- High Bias Scenario: A simple threshold-based model may incorrectly classify numerous relevant emails as spam (false positives), representing underfitting.

- High Variance Scenario: Meanwhile, a complex model whose behavior changes greatly depending on the particular emails it was trained on might incorrectly label legitimate emails as spam, because it has overfit to tiny fluctuations in that training data.
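You can mimic the spam example with one synthetic feature, a hypothetical "spam score". Nothing here comes from a real filter. The true rule flags messages whose score is far from zero in either direction, and 10% of labels are flipped to act as noise. A single-threshold rule cannot represent a two-sided boundary, so it underfits. A 1-nearest-neighbour classifier memorizes every noisy label, scoring perfectly on training data but worse on fresh data. A 15-neighbour vote should sit between the two.

```python
import random

def make_data(n, rng, flip=0.1):
    """Hypothetical 1-D 'spam score': spam (1) when |x| > 1, with a fraction `flip` of labels flipped."""
    pts = []
    for _ in range(n):
        x = rng.uniform(-3, 3)
        label = 1 if abs(x) > 1 else 0
        if rng.random() < flip:
            label = 1 - label  # label noise
        pts.append((x, label))
    return pts

def fit_threshold(train):
    """High-bias model: 'spam if x > t', with t picked to maximise training accuracy."""
    return max((x for x, _ in train),
               key=lambda t: sum(int(x > t) == y for x, y in train))

def knn_classify(train, x, k):
    """Majority vote among the k nearest training points (odd k avoids ties)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return 1 if 2 * sum(y for _, y in nearest) > k else 0

def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)

rng = random.Random(3)
train, test = make_data(300, rng), make_data(1000, rng)
t = fit_threshold(train)
models = {
    "threshold": lambda x: int(x > t),
    "1-NN": lambda x: knn_classify(train, x, 1),
    "15-NN": lambda x: knn_classify(train, x, 15),
}
results = {}
for name, predict in models.items():
    results[name] = (accuracy(predict, train), accuracy(predict, test))
    print(f"{name:9s} train acc={results[name][0]:.2f}  test acc={results[name][1]:.2f}")
```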

By carefully analyzing the tradeoff in both regression and classification problems, data practitioners can help ensure that their models maintain strong generalization capabilities, leading to improved accuracy and efficiency in predictions.

Evaluating Model Performance with Bias-Variance Tradeoff

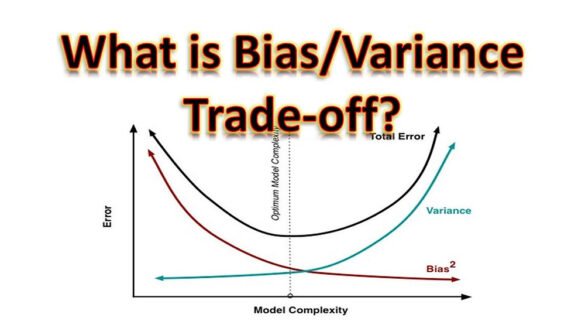

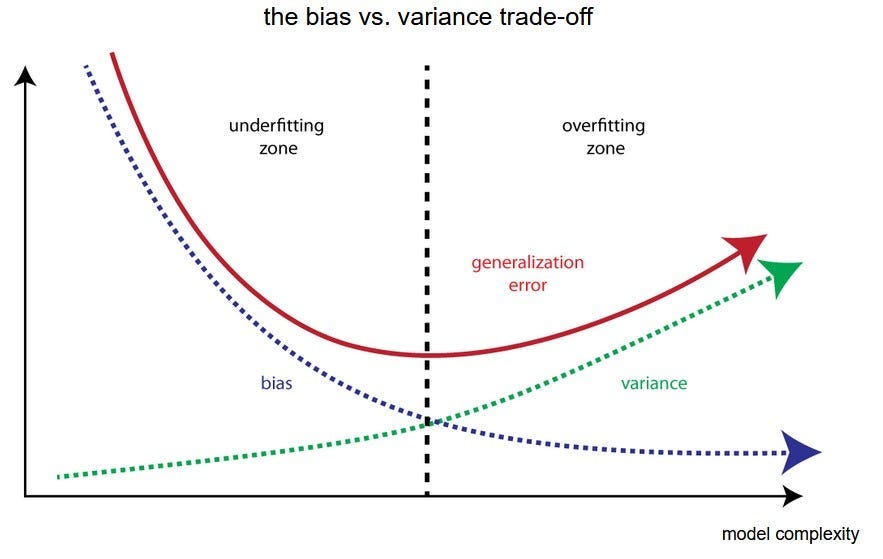

Bias-Variance Tradeoff Graph

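As we evaluate model performance, visual tools like the bias-variance tradeoff graph are invaluable. This graph typically plots error against model complexity. It shows three key elements: training error, validation error, and the total prediction error. Theoretical versions split the total into bias², variance, and irreducible noise.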

- Training Error: This keeps decreasing as model complexity increases, so a very low training error on its own is misleading. A large gap between training and validation error is the warning sign of overfitting.

- Validation Error: Initially, validation error decreases with model complexity. However, after a certain point, it begins to rise, illustrating the impact of high variance.

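- Total Error: The sweet spot we aim for is where the total expected error on new data is minimized, typically somewhere between high bias and high variance. In practice, the minimum of the validation error is our estimate of that point.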

Visualizing these errors side by side can be an eye-opener for machine learning practitioners. It’s like looking at a road map—knowing where you’re starting and where you might want to head to avoid trouble.
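You can reproduce the graph's curves numerically. In this sketch, the data are a synthetic sine wave, and k-nearest-neighbour regression stands in for "a model whose complexity we can dial". Fewer neighbours means a more complex model. Training error falls to exactly zero at one neighbour, because each training point then predicts itself. Validation error should fall at first and then rise again; its minimum is the practical estimate of the sweet spot.

```python
import math
import random

def knn_predict(train, x, n_neighbors):
    """Average the targets of the n_neighbors closest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:n_neighbors]
    return sum(y for _, y in nearest) / n_neighbors

def mse(train, data, n_neighbors):
    """Mean squared error of a k-NN model fitted on `train`, evaluated on `data`."""
    return sum((knn_predict(train, x, n_neighbors) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(5)

def draw(n):
    """Hypothetical data: y = sin(x) + Gaussian noise."""
    pts = []
    for _ in range(n):
        x = rng.uniform(0, 2 * math.pi)
        pts.append((x, math.sin(x) + rng.gauss(0, 0.3)))
    return pts

train, val = draw(200), draw(200)

# Iterate from simple (many neighbours) to complex (one neighbour).
curve = []
for nn in (150, 60, 20, 8, 3, 1):
    curve.append((nn, mse(train, train, nn), mse(train, val, nn)))
    print(f"n_neighbors={nn:3d}  train MSE={curve[-1][1]:.3f}  validation MSE={curve[-1][2]:.3f}")
```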

Impact on Prediction Error

Ultimately, understanding the bias-variance tradeoff has a profound impact on prediction error, because each component contributes error in a different way:

- High Bias: Leads to systematic errors, causing the model to consistently underestimate or overestimate.

- High Variance: Causes predictions to fluctuate in response to small changes in the training data, resulting in inconsistent outputs.

For example, think about predicting daily temperatures. A model with high bias may predict an average temperature for every day. A high-variance model, by contrast, swings wildly in response to quirks of the particular days it was trained on. By refining our understanding of bias and variance, and utilizing this framework effectively, we can significantly enhance the reliability and accuracy of our machine learning models.

Challenges and Misconceptions

Common Pitfalls in Managing Bias and Variance

Navigating the bias-variance tradeoff can be quite a journey, but it’s important to be aware of some common pitfalls along the way.

- Overcomplicating Models: One major mistake practitioners often make is opting for overly complex models too early in the process, leading to high variance. It’s akin to bringing a high-powered telescope to a backyard stargazing party—you may end up seeing every little detail but miss the beauty of the entire sky.

- Ignoring Validation Data: Another pitfall is neglecting to regularly validate models against unseen data. This can create an illusion of performance, masking real-world challenges.

- Focusing Solely on Performance Metrics: Relying too heavily on a single metric can obscure the bigger picture. A strong score on one split, for example, may hide a model that is badly out of balance between bias and variance.

Addressing Misunderstandings in Bias-Variance Tradeoff

To effectively manage bias and variance, addressing misunderstandings is crucial.

- Bias vs. Variance: A common misconception is relating bias solely to flawed models and variance only to complexity. In reality, both depend jointly on the model class, the amount of training data, and the noise in that data. Both shift along a spectrum as any of these factors changes.

- The Role of Data: Many assume that increasing data will eliminate variance entirely. More data usually reduces variance, but it does not remove it completely and does nothing to fix bias. High-quality data is just as significant. Garbage in, garbage out still holds true!

By recognizing these challenges and misconceptions, data practitioners can more effectively tackle the bias-variance tradeoff, leading to improved models and outcomes. Understanding the intricacies of this concept enables a more informed, strategic approach to developing reliable and high-performing machine learning systems.

Advanced Topics and Future Trends

Bayesian Approaches to Bias-Variance Tradeoff

As we delve into more advanced topics surrounding the bias-variance tradeoff, one intriguing approach is the Bayesian perspective. Bayesian methods provide a framework that allows us to incorporate prior knowledge into the modeling process, which can help in balancing bias and variance more effectively.

- Uncertainty Quantification: Bayesian models can quantify uncertainty, allowing practitioners to understand the confidence in predictions. This helps in making informed decisions, especially in high-stakes environments like healthcare.

- Regularization via Priors: A prior pulls parameters toward plausible values. A zero-mean Gaussian prior on the weights, for example, shrinks them toward zero much as Ridge regression does. This helps Bayesian methods resist overfitting and keeps effective model complexity in check. It’s akin to bringing a trusted guide on a hike; their insights help navigate the twists and turns more securely.
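The simplest conjugate case lets you check the "priors as regularization" point. Take a one-feature linear model with Gaussian noise and a zero-mean Gaussian prior on the weight. These assumptions are chosen for tractability, not as a general Bayesian recipe. The posterior mean then coincides with the Ridge estimate whose penalty is the ratio of noise variance to prior variance. The posterior standard deviation supplies the uncertainty quantification mentioned above.

```python
import math

def posterior_w(xs, ys, noise_var, prior_var):
    """Conjugate update for y = w*x + e, e ~ N(0, noise_var), prior w ~ N(0, prior_var).
    Returns the posterior mean and standard deviation of w."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    precision = sxx / noise_var + 1.0 / prior_var  # data precision + prior precision
    mean = (sxy / noise_var) / precision
    return mean, math.sqrt(1.0 / precision)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data on y = 2x
noise_var, prior_var = 1.0, 0.05

post_mean, post_sd = posterior_w(xs, ys, noise_var, prior_var)

# Ridge with penalty lam = noise_var / prior_var gives the same point estimate.
lam = noise_var / prior_var
ridge_equiv = sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

# Predictive uncertainty at a new input: parameter uncertainty plus observation noise.
x_new = 5.0
pred_sd = math.sqrt(x_new ** 2 * post_sd ** 2 + noise_var)
print(f"posterior mean {post_mean:.3f} (ridge: {ridge_equiv:.3f}), posterior sd {post_sd:.3f}")
print(f"prediction at x={x_new}: {post_mean * x_new:.2f} +/- {pred_sd:.2f} (1 sd)")
```

The prior pulls the estimate down from the least-squares slope of 2. Widening `prior_var` weakens that pull.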

Deep Learning Implications on Bias and Variance

Shifting gears to deep learning, this rapidly evolving field introduces unique implications for the bias-variance tradeoff.

- High Capacity Models: Deep learning models, with their many layers, can capture complex patterns but are susceptible to high variance if not managed correctly.

- Regularization Techniques: Techniques like dropout are essential for controlling variance in deep learning. Batch normalization is used mainly to stabilize training but also has a mild regularizing effect. It’s like playing a game of basketball; constant practice helps refine your technique and maintain a balanced performance.
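Dropout itself is a small operation. The sketch below shows the "inverted dropout" formulation, which scales activations at training time rather than at inference; PyTorch's `nn.Dropout` documents this behaviour. It is written in plain Python on a list of activations for illustration only. A real layer operates on tensors and redraws the random mask at every training step. Randomly silencing units stops the network from relying on any single co-adapted path through the training data, which is why it reduces variance.

```python
import random

def dropout(activations, p, training, rng):
    """Inverted dropout: in training, zero each unit with probability p and scale the
    survivors by 1/(1-p) so the expected activation is unchanged; at inference, pass through."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
h = [1.0] * 10_000  # hypothetical layer output
out = dropout(h, p=0.5, training=True, rng=rng)

frac_zeroed = sum(v == 0.0 for v in out) / len(out)
mean_act = sum(out) / len(out)
unchanged = dropout(h, p=0.5, training=False, rng=rng) == h
print(f"fraction zeroed: {frac_zeroed:.3f}, mean activation: {mean_act:.3f}, "
      f"inference unchanged: {unchanged}")
```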

By embracing these advanced approaches and understanding future trends, machine learning practitioners can more effectively navigate the land of bias and variance, setting the stage for innovative solutions and robust models in the evolving landscape of artificial intelligence.

Conclusion

Key Takeaways on Bias-Variance Tradeoff

As we wrap up our exploration of the bias-variance tradeoff, several key takeaways stand out. Understanding this concept is fundamental for developing effective machine learning models.

- Balance is Essential: Striking the right balance between bias and variance is crucial for ensuring robust model performance. A thoughtful approach to model complexity can lead to better generalization.

- Utilize Techniques: Leveraging methods like cross-validation, regularization, and ensemble learning can significantly help in managing the tradeoff.

- Practical Applications: Awareness of bias and variance impacts both regression and classification tasks, aiding practitioners in making informed decisions.

Remember, the bias-variance tradeoff isn’t just a theoretical concept; it’s a practical toolkit for enhancing model performance.

Continued Learning in Machine Learning Bias and Variance Tradeoff

The journey of learning doesn’t stop here. As the field of machine learning evolves, staying updated on the latest trends and techniques related to bias and variance is vital.

- Engage with Communities: Participating in forums and attending workshops can provide new insights and practical strategies.

- Experiment and Iterate: With every model you build, take the time to experiment with bias-variance management techniques.

By fostering a mindset of continuous learning, practitioners can sharpen their skills and keep pace with the ever-changing landscape of machine learning, ultimately leading to more accurate and reliable models.