Introduction

Definition of Interpretability in Machine Learning

Interpretability in machine learning (ML) refers to the ability to understand and explain the decisions made by ML models. It’s not just about how well a model predicts outcomes but also about whether stakeholders can grasp why those predictions were made. Think of it as the difference between a black box and a glass box: seeing how input data flows through the model to a prediction demystifies the process.

Significance of Interpretability in the ML Context

Interpretability matters in machine learning for several reasons:

- Building Trust: Users are more likely to embrace AI solutions when they can see the rationale behind decisions.

- Making Informed Choices: Organizations can make strategic decisions based on transparent insights.

- Mitigating Risks: Understanding model behavior can help identify potential biases or inaccuracies, leading to more ethical outcomes.

By prioritizing interpretability, we encourage responsible AI development, paving the way for innovative applications across various sectors.

Importance of Interpretability

Enhancing Trust and Confidence

One of the primary reasons to focus on interpretability is that it builds trust and confidence among users. When individuals or organizations can understand how an ML model arrives at its decisions, they are more likely to rely on it. Consider a healthcare scenario where a model predicts patient outcomes. If clinicians can see why the model flagged a patient, they can check its reasoning against their own judgment, which can lead to better patient care.

Facilitating Regulatory Compliance

In industries like finance and healthcare, regulatory guidelines demand transparency. Interpretability not only helps models meet these requirements but also enables businesses to demonstrate accountability. This is crucial because:

- Regulatory bodies often require explanations for automated decisions.

- Failure to comply can result in hefty fines and reputational damage.

Promoting Ethical AI Practices

Interpretability also plays a vital role in promoting ethical AI practices. By providing insights into model behavior, it helps organizations detect biases and inappropriate decision-making processes. Ensuring fairness and accountability in AI solutions is not just a trend; it’s a responsibility that tech companies must embrace to foster an equitable digital future. Adhering to these ethical standards ultimately enhances the credibility of AI technologies.

Techniques for Enhancing Interpretability

Feature Importance Analysis

Several techniques can make machine learning models more interpretable. One effective approach is feature importance analysis, which determines which features most strongly influence a model’s predictions. Imagine you’re looking at a house pricing model. Knowing how much location, square footage, and amenities each contribute helps stakeholders make sense of the value estimates.
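
Permutation importance is one common way to carry out feature importance analysis. You shuffle one feature's values, re-score the model, and treat the resulting rise in error as that feature's importance. The sketch below applies this to a hypothetical house-pricing model. The pricing formula, the feature names (`location_score`, `square_feet`, `has_pool`), and the synthetic data are all assumptions made for illustration. For real projects, scikit-learn provides a library version as `sklearn.inspection.permutation_importance`.

```python
import random

# Hypothetical house-pricing model: a fixed formula standing in for any trained
# regressor. The weights are illustrative assumptions, not fitted values.
def predict_price(row):
    location_score, square_feet, has_pool = row
    return 50_000 * location_score + 150 * square_feet + 10_000 * has_pool


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in MSE when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + (v,) + row[j + 1:] for row, v in zip(X, column)]
            permuted = mean_squared_error(y, [model(row) for row in X_perm])
            increases.append(permuted - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic, noise-free data purely for illustration.
data_rng = random.Random(42)
X = [(data_rng.uniform(0, 10), data_rng.uniform(500, 3000), data_rng.choice([0, 1]))
     for _ in range(200)]
y = [predict_price(row) for row in X]
names = ["location_score", "square_feet", "has_pool"]
scores = permutation_importance(predict_price, X, y)
for name, score in sorted(zip(names, scores), key=lambda pair: -pair[1]):
    print(f"{name}: {score:,.0f}")
```

In this made-up formula, `location_score` moves the predicted price the most, so it should rank first and `has_pool` should rank last. Permutation importance works with any model that can score new rows. The trade-off is that it requires many extra predictions.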

Local Explanations

Next, local explanations show how specific inputs lead to a particular output. This method can clarify individual predictions in contexts like credit scoring or loan approvals. For example, tools like LIME (Local Interpretable Model-agnostic Explanations) can show which features drove the prediction for a single instance, helping users understand individual decisions at a granular level.
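
A LIME-style local explanation can be sketched in four steps. First, sample points near the instance being explained. Second, query the model on those points. Third, weight each sample by how close it is to the instance. Finally, fit a weighted linear model whose slopes serve as the explanation. The sketch below assumes a made-up credit model (`black_box`) over income and debt-to-income ratio, and its sampling and kernel choices are simplifications. The actual `lime` package differs in its details; for example, it can discretize continuous features before fitting.

```python
import math
import random

# Hypothetical black-box credit model (an assumption for illustration): returns
# an approval probability from income (in $10k units) and debt-to-income ratio.
def black_box(income, dti):
    z = 0.4 * income - 8.0 * dti ** 2 - 1.0
    return 1.0 / (1.0 + math.exp(-z))


def solve(A, b):
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def local_surrogate(model, instance, scales, n_samples=500, kernel_width=1.0, seed=0):
    """LIME-style sketch: weighted linear fit to the model near one instance."""
    rng = random.Random(seed)
    k = len(instance)
    # Accumulate normal equations for weighted least squares with an intercept.
    XtWX = [[0.0] * (k + 1) for _ in range(k + 1)]
    XtWy = [0.0] * (k + 1)
    for _ in range(n_samples):
        # Perturb each feature with Gaussian noise scaled to that feature.
        z = [rng.gauss(0.0, 1.0) for _ in range(k)]
        point = [x + s * zi for x, s, zi in zip(instance, scales, z)]
        # Proximity weight in scaled units: closer samples count more.
        w = math.exp(-sum(zi * zi for zi in z) / kernel_width ** 2)
        row = [1.0] + point
        target = model(*point)
        for i in range(k + 1):
            XtWy[i] += w * row[i] * target
            for j in range(k + 1):
                XtWX[i][j] += w * row[i] * row[j]
    coef = solve(XtWX, XtWy)
    return coef[1:]  # per-feature local slopes; intercept dropped


slopes = local_surrogate(black_box, instance=[5.0, 0.3], scales=[1.0, 0.05])
for name, slope in zip(["income_10k", "debt_to_income"], slopes):
    print(f"{name}: {slope:+.3f}")
```

For this applicant, income should receive a positive local slope and debt-to-income a negative one. A loan officer could read this kind of per-decision explanation directly.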

Model-Agnostic Methods

Finally, model-agnostic methods are powerful because they can be applied to any model, regardless of its complexity. Techniques such as SHAP (SHapley Additive exPlanations) build on Shapley values from cooperative game theory. They attribute each prediction to the input features in a consistent way, which makes them valuable for anyone working across diverse machine learning frameworks. By using these techniques, organizations can build trust through clearer explanations and help ensure that AI is used responsibly in decision-making.
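
To make the idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force. Each feature's attribution is its weighted average marginal contribution over every coalition of the other features. Two assumptions are worth flagging. First, a "missing" feature is simulated by swapping in a baseline value. Second, the model is a hypothetical function with an interaction term. The cost of exact enumeration grows exponentially with the number of features. That cost is why the `shap` library relies on approximations such as KernelSHAP and on model-specific algorithms such as TreeSHAP.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition are set to their baseline value, one common
    (assumed) way of simulating a missing feature.
    """
    n = len(x)

    def value(coalition):
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(v):
    # Hypothetical model with an interaction between features 0 and 2.
    return 2 * v[0] + 3 * v[1] + v[0] * v[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(v, 6) for v in phi])  # [3.5, 6.0, 1.5]
print(round(sum(phi), 6), model(x) - model(baseline))  # 11.0 11.0
```

The attributions add up to the gap between the prediction and the baseline prediction (the efficiency property). The interaction term's contribution of 3 is split evenly between features 0 and 2.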

Applications of Interpretable ML Models

Healthcare Industry

With these techniques in hand, interpretable machine learning models can be applied across many fields. In healthcare, they can help improve patient outcomes. For instance, predictive models that identify patients at risk of developing chronic conditions can give healthcare professionals clear insights, allowing for timely intervention. Clinicians value being able to understand why a model suggests a particular treatment, which increases their confidence in using AI tools.

Finance Sector

Similarly, in the finance sector, interpretable models are crucial for risk assessment and loan approvals. Banks use machine learning to assess creditworthiness, but they must be able to explain why an applicant was approved or denied. This not only aids regulatory compliance but also builds trust with customers, who deserve transparency in financial decisions.

Judicial Decisions

Lastly, the application of interpretable ML models in judicial decisions can be transformative. For example, risk assessment tools used in sentencing can generate insights that highlight the reasoning behind their recommendations. This transparency helps legal professionals justify their choices, fostering fairness and accountability in the justice system. By applying interpretable models across these sectors, organizations can drive meaningful change while maintaining ethical standards and public trust.

Challenges and Limitations

Trade-offs with Model Performance

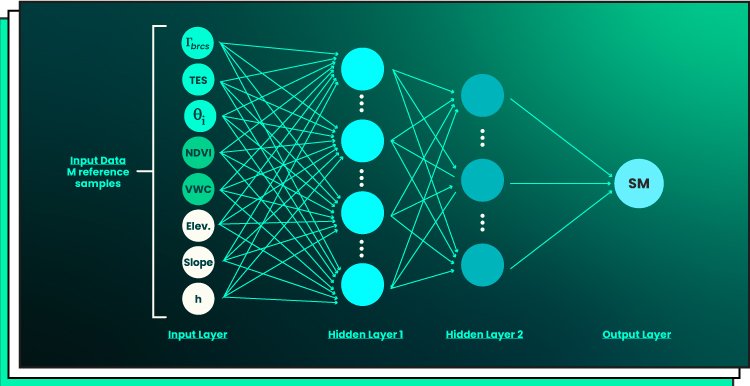

Despite the promising applications of interpretable machine learning models, there are significant challenges and limitations. One major hurdle is the trade-off between interpretability and model performance. Simpler models are easier to interpret but often cannot match the predictive accuracy of more complex algorithms. For instance, a linear regression shows the impact of each variable on the target outcome directly. A deep learning model may predict better but offers no equally straightforward explanation.
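
The transparency of linear regression comes from its coefficients. Each coefficient reads directly as "change in the target per unit change in this input." The minimal sketch below fits ordinary least squares with a single predictor on four hypothetical houses, using data made up for illustration. The fitted slope says that each extra square foot adds about $102 to the predicted price.

```python
# Hypothetical toy data: square footage and sale price for four houses.
square_feet = [1000, 1500, 2000, 2500]
price = [200_000, 260_000, 290_000, 360_000]


def fit_simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var = sum((xi - mean_x) ** 2 for xi in x)
    slope = cov / var
    return mean_y - slope * mean_x, slope


intercept, slope = fit_simple_linear_regression(square_feet, price)
print(f"price = {intercept:,.0f} + {slope:,.0f} * square_feet")
# price = 99,000 + 102 * square_feet
```

A deep network trained on the same data has no single number with this meaning. That gap is what the post-hoc techniques discussed earlier try to fill.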

Complexity of Data

Additionally, the complexity of data can pose challenges. Real-world data is often messy and multifaceted, making it difficult to extract clear insights. In healthcare, for example, patient histories may include numerous variables, and distilling these down to interpretable features requires careful consideration and domain expertise.

Human-Centric Interpretability

Finally, interpretability is human-centric: what counts as a clear explanation differs from person to person. What makes sense to a data scientist might not be understandable to a non-technical user. This disparity highlights the need for a variety of interpretive tools tailored to different audiences. Navigating these challenges is crucial for the continued development of, and trust in, interpretable machine learning.

Interpretability in Real-World Scenarios

Case Study 1: Predictive Maintenance

To further illustrate the importance of interpretability, let’s examine two real-world case studies. The first involves predictive maintenance in manufacturing. In this context, machine learning models analyze sensor data from equipment to predict failures before they occur. Using interpretability techniques, engineers can pinpoint which sensors contribute most to failure predictions. This clarity lets them prioritize maintenance effectively, minimizing downtime and costs while improving safety.
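
One way to pinpoint which sensors drive failure predictions is to compute each sensor's contribution to every prediction and then average the absolute contributions across many readings. The sketch below does this for a hypothetical logistic failure model. The sensor names, weights, and standardized readings are all assumptions. Contributions are measured on the log-odds scale, relative to the average reading.

```python
import math

# Hypothetical logistic failure model over standardized sensor readings.
# Sensor names, weights, and bias are illustrative assumptions.
WEIGHTS = {"vibration_rms": 1.8, "bearing_temp": 1.1, "motor_current": 0.3}
BIAS = -3.0


def failure_probability(reading):
    z = BIAS + sum(w * reading[s] for s, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def sensor_contributions(reading, reference):
    """Log-odds contribution of each sensor relative to a reference reading."""
    return {s: w * (reading[s] - reference[s]) for s, w in WEIGHTS.items()}


def rank_sensors(readings):
    """Rank sensors by mean absolute contribution across many predictions."""
    reference = {s: sum(r[s] for r in readings) / len(readings) for s in WEIGHTS}
    totals = dict.fromkeys(WEIGHTS, 0.0)
    for r in readings:
        for s, c in sensor_contributions(r, reference).items():
            totals[s] += abs(c)
    return sorted(((s, t / len(readings)) for s, t in totals.items()),
                  key=lambda pair: pair[1], reverse=True)


readings = [
    {"vibration_rms": 0.2, "bearing_temp": -0.5, "motor_current": 1.0},
    {"vibration_rms": 2.5, "bearing_temp": 1.5, "motor_current": -0.4},
    {"vibration_rms": -1.0, "bearing_temp": 0.0, "motor_current": 0.2},
    {"vibration_rms": 0.5, "bearing_temp": 0.8, "motor_current": -0.8},
]
for r in readings:
    print(f"failure risk: {failure_probability(r):.2f}")
for sensor, score in rank_sensors(readings):
    print(f"{sensor}: {score:.3f}")
```

Averaging absolute local contributions turns per-prediction explanations into a global ranking. An engineer could use that ranking to decide which sensors to inspect first.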

Case Study 2: Credit Risk Assessment

The second case study focuses on credit risk assessment in the finance industry. Lenders use machine learning models to evaluate applicants’ creditworthiness. However, it’s imperative for these models to explain decisions—why an applicant was denied credit, for example. Using interpretable methods, institutions can provide clear reasons tied to specific factors like income, debt-to-income ratio, and credit history. This transparency not only builds customer trust but also helps organizations comply with regulations, creating a more equitable lending process. These case studies exemplify how interpretability is not just a feature, but a necessity in transforming data into actionable insights across industries.
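
Explanations like these are often delivered as "reason codes": the factors that pushed an applicant's score down the most. The sketch below derives reason codes from a hypothetical logistic credit model by comparing each feature's contribution against a reference applicant. The feature names, weights, reference values, and 0.5 approval threshold are all assumptions, not a real lender's scorecard.

```python
import math

# Hypothetical logistic credit model. Feature names, weights, and the
# reference applicant are illustrative assumptions, not a real scorecard.
WEIGHTS = {"income_10k": 0.3, "debt_to_income": -6.0, "credit_history_years": 0.15}
BIAS = -1.0
REFERENCE = {"income_10k": 6.0, "debt_to_income": 0.25, "credit_history_years": 8.0}


def approval_probability(applicant):
    z = BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def reason_codes(applicant, top_k=2):
    """Features that pulled the log-odds furthest below the reference applicant."""
    contrib = {f: w * (applicant[f] - REFERENCE[f]) for f, w in WEIGHTS.items()}
    negative = [(f, c) for f, c in contrib.items() if c < 0]
    negative.sort(key=lambda pair: pair[1])  # most negative first
    return [f for f, _ in negative[:top_k]]


applicant = {"income_10k": 4.0, "debt_to_income": 0.55, "credit_history_years": 2.0}
p = approval_probability(applicant)
decision = "approved" if p >= 0.5 else "denied"  # assumed 0.5 threshold
print(f"{decision} (p = {p:.2f}); main reasons: {reason_codes(applicant)}")
# denied (p = 0.06); main reasons: ['debt_to_income', 'credit_history_years']
```

Comparing against a reference applicant is one design choice among several. Other choices, such as a population average or the decision threshold itself, can change which reasons are reported.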

Future Trends and Research Directions

Automated Interpretability Techniques

Looking ahead, one of the most exciting future trends in machine learning interpretability is the development of automated interpretability techniques. These innovations could streamline the process, enabling users to automatically generate clear explanations for model predictions without extensive manual intervention. Imagine a scenario where a healthcare professional receives instant, comprehensible insights alongside predictive analytics, boosting both efficiency and decision-making confidence.

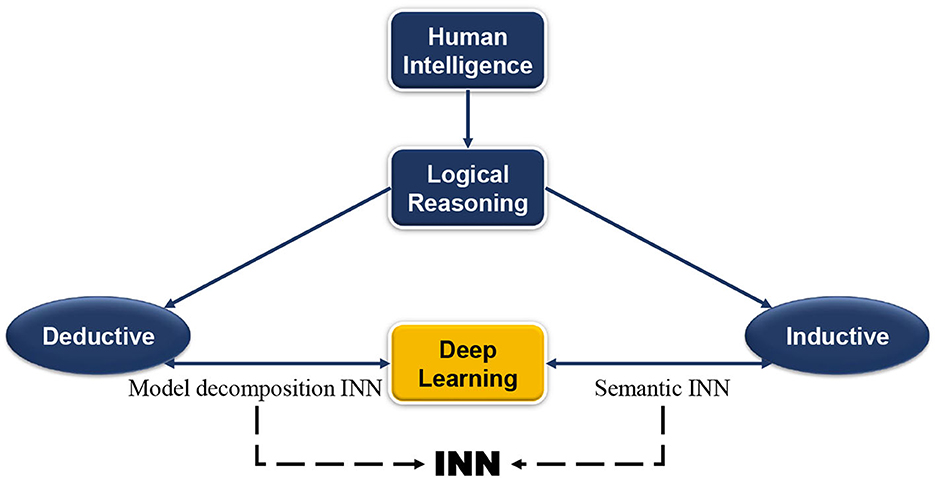

Interdisciplinary Studies in ML Interpretability

Another promising direction is the push for interdisciplinary studies in ML interpretability. By collaborating with experts from fields such as psychology, ethics, and sociology, researchers can enhance our understanding of what constitutes effective explanations for diverse audiences. This holistic approach ensures that interpretability doesn’t become a “one-size-fits-all” solution but is instead tailored to fit the context of use. Bridging these disciplines could lead to richer, more impactful insights, making machine learning systems not just powerful but also deeply responsive to the needs of society.

Conclusion

Recap of the Importance of Interpretability

In summary, the importance of interpretability in machine learning cannot be overstated. As explored throughout this article, clear explanations of model predictions enhance trust, foster accountability, and ensure compliance across various sectors. From healthcare to finance and even judicial systems, being able to explain decisions made by algorithms is crucial for ethical and informed practices.

Call to Action for Embracing Transparent ML Models

Now is the time for practitioners and organizations to embrace transparent machine learning models. By prioritizing interpretability alongside performance, stakeholders can create AI systems that are not only powerful but also trustworthy and responsible. As we move toward a data-driven future, let us commit to advancing machine learning interpretability, ensuring that AI tools empower, rather than confuse, those who rely on them. Join the conversation, advocate for clear explanations, and be part of the movement towards a more transparent AI ecosystem!