Introduction

Overview of Machine Learning in Python

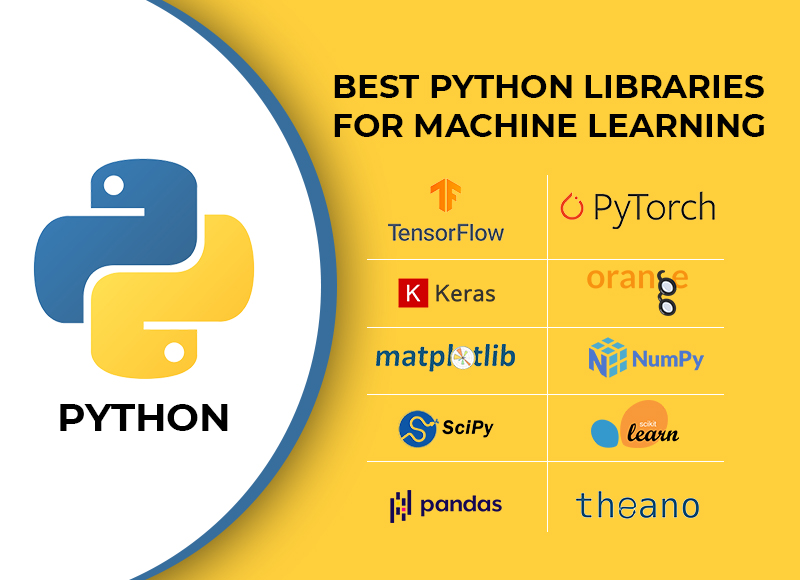

Machine learning has revolutionized how data is analyzed and interpreted across various industries. With the rise of Python as a programming language of choice, practitioners of all skill levels are finding it easier to implement machine learning solutions. Python’s readable syntax and extensive community support make it an excellent entry point for aspiring data scientists.

For instance, a beginner might start with simple algorithms like linear regression and gradually move on to complex models such as neural networks. The beauty of Python lies in its accessibility, encouraging experimentation and innovation.

Importance of Python Libraries in Machine Learning

The real power of Python in machine learning comes from its robust library ecosystem. These libraries provide pre-built functions and frameworks, enabling rapid development and experimentation. Some key benefits include:

- Efficiency: Libraries like NumPy and Pandas streamline data manipulation and analysis.

- Versatility: Frameworks such as TensorFlow and Keras allow for deep learning model creation with minimal coding.

- Community Support: A vast community means tutorials, documentation, and forums are readily available, easing problem-solving.

With a strong foundation in these Python libraries, mastering machine learning becomes an exciting journey rather than a daunting task.

NumPy Library

Introduction to NumPy

When it comes to data manipulation in Python, the NumPy library stands at the forefront. Designed specifically for scientific computing, it offers powerful array structures that can efficiently store and process large datasets. Having used NumPy in various projects, I find that the speed and ease it brings to data handling truly stand out.

For many beginners, grasping NumPy can be a game-changer, allowing them to shift from traditional data processing methods to more advanced analytics seamlessly.

Array Creation and Manipulation

Creating arrays in NumPy is straightforward and can be done in multiple ways:

- `np.array()`: Convert Python lists into arrays.

- `np.zeros()` and `np.ones()`: Generate arrays filled with zeros or ones, respectively.

- `np.arange()` and `np.linspace()`: Create evenly spaced values over a specified range.

Manipulating these arrays is just as intuitive, with operations like reshaping, slicing, and indexing readily available.
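As a rough illustration of the creation functions and the reshaping, slicing, and indexing mentioned above, here is a minimal sketch. The values and variable names are arbitrary choices for this example.

```python
import numpy as np

# Creation: all values below are arbitrary illustration data.
from_list = np.array([1, 2, 3, 4, 5, 6])   # built from a Python list
zeros = np.zeros((2, 3))                   # 2x3 array filled with 0.0
ones = np.ones(4)                          # length-4 array filled with 1.0
steps = np.arange(0, 10, 2)                # 0, 2, 4, 6, 8 (stop value excluded)
grid = np.linspace(0.0, 1.0, 5)            # 5 evenly spaced points, 0 to 1 inclusive

# Manipulation: reshape, slice, and index.
matrix = from_list.reshape(2, 3)           # [[1, 2, 3], [4, 5, 6]]
first_row = matrix[0]                      # [1, 2, 3]
last_column = matrix[:, -1]                # [3, 6]
evens = from_list[from_list % 2 == 0]      # boolean mask keeps [2, 4, 6]
```

Note that `np.arange()` takes a step size while `np.linspace()` takes a number of points, which is usually the deciding factor between the two.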

Mathematical Functions

NumPy also excels in mathematical capabilities, providing a plethora of functions for computations:

- Element-wise operations: Add, subtract, multiply, and divide arrays effortlessly.

- Statistical functions: Calculate means, medians, and standard deviations quickly.

- Linear algebra: Functions for matrix multiplication and solving linear equations make complex operations manageable.
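The three categories above might look like this in practice. This is only a sketch on small made-up arrays; the system of equations is an arbitrary example.

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 5.0, 6.0])

# Element-wise operations work on whole arrays at once.
total = a + b              # [5, 7, 9]
product = a * b            # [4, 10, 18]

# Statistical functions.
mean_b = b.mean()          # 5.0
median_b = np.median(b)    # 5.0
std_a = a.std()            # population standard deviation (ddof=0)

# Linear algebra: matrix product and solving A @ x = y.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
y = np.array([3.0, 5.0])
x = np.linalg.solve(A, y)  # approximately [0.8, 1.4]
check = A @ x              # reproduces y up to rounding
```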

Using NumPy not only enhances efficiency but also makes tackling mathematical problems in data science a breeze.

Pandas Library

Introduction to Pandas

Following the powerful capabilities of NumPy, the Pandas library plays a crucial role in data manipulation and analysis in Python. With its intuitive design, Pandas allows users to handle structured data with ease. When I first encountered Pandas, I was impressed by how quickly it enabled me to analyze datasets that seemed overwhelming at first glance.

Pandas provides two primary data structures—Series and DataFrame—that facilitate handling data in various formats, making it an essential tool for data scientists.

Data Structures in Pandas

- Series: A one-dimensional labeled array capable of holding any data type. Think of it as a column in a spreadsheet.

- DataFrame: A two-dimensional labeled data structure that resembles a table in a database or an Excel sheet. It allows for both row and column labeling.
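To make the two structures concrete, here is a small sketch. The fruit inventory is invented data used only for illustration.

```python
import pandas as pd

# A Series: one labeled column of values.
prices = pd.Series(
    [1.20, 0.50, 2.75],
    index=["apple", "banana", "cherry"],
    name="price",
)

# A DataFrame: a table with labeled rows and labeled columns.
inventory = pd.DataFrame(
    {"price": [1.20, 0.50, 2.75], "stock": [10, 25, 4]},
    index=["apple", "banana", "cherry"],
)

banana_price = prices["banana"]                   # look up by label in a Series
cherry_stock = inventory.loc["cherry", "stock"]   # row label plus column label
price_column = inventory["price"]                 # a single column is itself a Series
```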

These structures make data storage and retrieval a walk in the park, making it simple to perform operations across the entire dataset.

Data Manipulation and Analysis

Data manipulation in Pandas is incredibly straightforward, allowing users to perform tasks such as:

- Filtering and selecting: Easily retrieve specific data points or subsets of data.

- Group operations: Use methods like `groupby()` to analyze and summarize data.

- Merging and joining: Combine different datasets seamlessly using functions like `merge()`.
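The three operations above can be sketched on a pair of toy tables. The `orders` and `customers` frames and their column names are hypothetical.

```python
import pandas as pd

# Hypothetical example tables.
orders = pd.DataFrame({
    "customer_id": [1, 2, 1, 3],
    "amount": [20.0, 35.0, 15.0, 50.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "name": ["Ana", "Ben", "Cho"],
})

# Filtering: keep only rows where the condition is True.
large_orders = orders[orders["amount"] > 18]

# Group operation: total amount per customer.
totals = orders.groupby("customer_id", as_index=False)["amount"].sum()

# Merging: attach customer names to the totals via the shared key.
report = totals.merge(customers, on="customer_id", how="left")
```

Using `how="left"` keeps every row of `totals` even if a customer were missing from `customers`; other join types may suit other cases.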

By utilizing Pandas, data analysis transforms from a tedious task into an efficient and enjoyable experience, paving the way for deeper insights and informed decision-making.

Scikit-learn Library

Introduction to Scikit-learn

Continuing from the data manipulation capabilities of Pandas, Scikit-learn emerges as a cornerstone library for implementing machine learning algorithms in Python. This library has become my go-to toolkit, especially in projects requiring classification, regression, and clustering. With its simple API and extensive documentation, beginners and experts alike can develop sophisticated models with relative ease.

Scikit-learn not only provides ready-to-use functions but also encourages best practices in model development, making it a vital asset in any data scientist’s repertoire.

Machine Learning Algorithms in Scikit-learn

Scikit-learn encompasses a wide range of algorithms, each suited for different tasks:

- Supervised learning: Algorithms like linear regression, support vector machines, and decision trees.

- Unsupervised learning: Techniques such as k-means clustering and hierarchical clustering.

- Ensemble methods: Bagging techniques like Random Forest and boosting techniques like Gradient Boosting.
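As a brief sketch of how these families share the same `fit` and `score` pattern, the example below trains a decision tree, a random forest, and a k-means model. The bundled Iris dataset and the specific settings are assumptions made for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Iris is used here only as a small, built-in example dataset.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Supervised learning: a single decision tree.
tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
tree_accuracy = tree.score(X_test, y_test)

# Ensemble method: a random forest (bagged trees).
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)
forest_accuracy = forest.score(X_test, y_test)

# Unsupervised learning: k-means ignores the labels entirely.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
cluster_labels = kmeans.labels_
```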

With these options, finding the right algorithm for your specific problem becomes manageable.

Model Evaluation and Selection

What’s equally important is evaluating your model’s performance. Scikit-learn offers several techniques to ensure you’re building robust models:

- Cross-validation: Use `cross_val_score()` to validate your model’s performance across multiple subsets of data.

- Metrics: Functions like `accuracy_score`, `confusion_matrix`, and `f1_score` provide valuable insight into model efficacy.

- Grid Search: With `GridSearchCV`, users can systematically explore different hyperparameters to optimize model performance.
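A minimal sketch of these three techniques working together follows. The choice of an SVM classifier, the Iris dataset, and the parameter grid are assumptions for the example, not recommendations; `f1_score` is the scikit-learn function that computes the F1 metric.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, confusion_matrix, f1_score
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Cross-validation: one accuracy score per fold of the training data.
cv_scores = cross_val_score(SVC(), X_train, y_train, cv=5)

# Grid search: try every combination in the (illustrative) grid.
param_grid = {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]}
search = GridSearchCV(SVC(), param_grid=param_grid, cv=5)
search.fit(X_train, y_train)

# Metrics: evaluate the best model on data it has not seen.
y_pred = search.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
macro_f1 = f1_score(y_test, y_pred, average="macro")
matrix = confusion_matrix(y_test, y_pred)
```

Keeping a held-out test set separate from the data used in the grid search helps avoid an overly optimistic performance estimate.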

By leveraging Scikit-learn’s capabilities, data scientists can refine their models for accuracy and reliability, ultimately enhancing the quality of their predictions.

TensorFlow Library

Introduction to TensorFlow

Following the fundamental algorithms offered by Scikit-learn, TensorFlow stands out as a robust library tailored for deep learning. Initially developed by Google, it provides the tools needed to build complex neural networks efficiently. My first experience with TensorFlow was exhilarating; the ability to construct sophisticated models that could learn from data and improve over time felt like magic.

TensorFlow’s flexibility and scalability make it suitable for both beginners and seasoned data scientists alike, facilitating everything from simple experiments to intricate production-grade models.

Building Neural Networks with TensorFlow

Creating neural networks in TensorFlow can be straightforward, particularly with its high-level Keras API. Some key steps include:

- Defining the architecture: Specify the number of layers and nodes.

- Choosing activation functions: Options like ReLU and sigmoid add the non-linearity that lets the network learn complex patterns.

- Compiling the model: Select optimizers and loss functions that align with your objectives.
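The steps above could look roughly like this with the Keras API bundled in TensorFlow. The input size of 20 features, the layer widths, and the binary target are all hypothetical choices.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Architecture: hypothetical problem with 20 input features and a binary label.
model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(32, activation="relu"),     # hidden layer with ReLU
    layers.Dense(16, activation="relu"),     # second hidden layer
    layers.Dense(1, activation="sigmoid"),   # sigmoid gives a probability
])

# Compilation: optimizer and loss chosen to match binary classification.
model.compile(
    optimizer="adam",
    loss="binary_crossentropy",
    metrics=["accuracy"],
)
```

Calling `model.summary()` afterwards prints the layers and parameter counts, which is a quick way to check the architecture.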

This structured approach allows even novices to construct effective neural networks rapidly.

Model Training and Deployment

Once your model is built, the next steps involve training and deploying it.

- Model training: Utilize TensorFlow’s `fit()` method to train the model on your dataset, tweaking parameters as necessary.

- Evaluation: After training, assess model performance using validation data, ensuring it generalizes well.

- Deployment: TensorFlow Serving enables the easy deployment of models, allowing for real-time predictions in various applications.
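Here is a small sketch of the training and evaluation steps on synthetic data. The dataset, the labeling rule, and the epoch count are made up for illustration, and deployment with TensorFlow Serving is not covered by this example.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Synthetic data: the label depends on the first two features (an invented rule).
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 20)).astype("float32")
y = (X[:, 0] + X[:, 1] > 0).astype("float32").reshape(-1, 1)
X_train, y_train = X[:300], y[:300]
X_val, y_val = X[300:], y[300:]

model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Training: fit() returns a History object with per-epoch metrics.
history = model.fit(
    X_train, y_train,
    epochs=5, batch_size=32,
    validation_data=(X_val, y_val),
    verbose=0,
)

# Evaluation: loss and accuracy on the held-out validation split.
val_loss, val_accuracy = model.evaluate(X_val, y_val, verbose=0)
```

Comparing `history.history["loss"]` with `history.history["val_loss"]` is one common way to spot overfitting.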

Harnessing TensorFlow transforms the machine learning process, enabling practical implementations and pushing the boundaries of what’s possible with artificial intelligence.

Keras Library

Introduction to Keras

As we transition from TensorFlow, the Keras library emerges as a user-friendly interface designed for building and training deep learning models. What I find particularly appealing about Keras is its simplicity and efficiency; it abstracts many of the complexities involved in model development while still offering robust capabilities. Originally developed as a standalone high-level library that could run on several backends, Keras is now also bundled with TensorFlow as its high-level API (`tf.keras`). It streamlines the entire process, making it an excellent choice for both beginners and advanced users.

With Keras, you can quickly prototype and iterate on deep learning models with minimal code, which can significantly speed up your development workflow.

Deep Learning Models in Keras

Building deep learning models in Keras is intuitive. Users can define models using either the Sequential API or the Functional API, depending on their complexity:

- Sequential API: Ideal for simple stacks of layers.

- Functional API: Best for intricate architectures, such as multi-input or multi-output models.
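To show the difference, the sketch below builds one model with each API. The input sizes and the names `numeric` and `text_features` are hypothetical.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Sequential API: a plain stack, one layer after another.
sequential_model = keras.Sequential([
    keras.Input(shape=(8,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1),
])

# Functional API: two separate inputs that are merged before the output.
numeric_in = keras.Input(shape=(8,), name="numeric")
text_in = keras.Input(shape=(4,), name="text_features")
numeric_branch = layers.Dense(16, activation="relu")(numeric_in)
text_branch = layers.Dense(8, activation="relu")(text_in)
merged = layers.Concatenate()([numeric_branch, text_branch])
output = layers.Dense(1, name="score")(merged)
functional_model = keras.Model(inputs=[numeric_in, text_in], outputs=output)
```

The Functional API treats layers as functions applied to tensors, which is what makes branching and merging possible.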

This flexibility allows developers to create various architectures, from feedforward networks to convolutional neural networks.

Image Classification Example

To illustrate Keras’ power, consider an image classification task. By utilizing Keras, setting up a convolutional neural network (CNN) to classify images can be achieved in just a few lines:

- Prepare your data: Load and preprocess the dataset.

- Define the architecture: Stack layers using `Sequential()`.

- Compile the model: Choose an optimizer, loss function, and metrics.

- Train the model: Use `fit()` to train on your dataset.
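The four steps might be sketched as follows. Random arrays stand in for a real image dataset here, so the model will not learn anything meaningful; the image size, layer sizes, and epoch count are assumptions.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# 1. Prepare data: random 32x32 RGB "images" as a placeholder for a real dataset.
rng = np.random.default_rng(0)
images = rng.random((64, 32, 32, 3)).astype("float32")
labels = rng.integers(0, 2, size=(64, 1)).astype("float32")  # two classes

# 2. Define the architecture: convolution and pooling, then a dense classifier.
model = keras.Sequential([
    keras.Input(shape=(32, 32, 3)),
    layers.Conv2D(16, 3, activation="relu"),
    layers.MaxPooling2D(),
    layers.Conv2D(32, 3, activation="relu"),
    layers.MaxPooling2D(),
    layers.Flatten(),
    layers.Dense(32, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])

# 3. Compile the model.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# 4. Train, then predict class probabilities for a few images.
history = model.fit(images, labels, epochs=2, batch_size=16, verbose=0)
probabilities = model.predict(images[:4], verbose=0)
```

With a real dataset, the placeholder arrays would be replaced by loaded and rescaled images, and a validation split would normally be added.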

For example, classifying images of cats and dogs can enhance your understanding of both Keras and deep learning. With just a few simple commands, Keras allows users to dive deep into the fascinating world of neural networks, bridging the gap between theory and practical application.

Matplotlib and Seaborn Libraries

Data Visualization with Matplotlib

As we delve deeper into the analysis of data, visualization becomes an invaluable tool. While TensorFlow and Keras focus on model building, Matplotlib provides the platform for visual exploration and understanding of datasets. My first foray into data visualization was with Matplotlib, where I quickly learned that a well-crafted plot could reveal insights that raw data often obscures.

Matplotlib’s flexibility allows users to create a wide variety of plots, including line graphs, bar charts, and scatter plots, making it a cornerstone for data analysis.

Creating Plots in Matplotlib

Creating basic plots with Matplotlib is straightforward. Here’s a quick overview of the steps involved:

- Import the library: Use `import matplotlib.pyplot as plt`.

- Prepare your data: Organize your data into x and y variables.

- Create the plot: Use functions like `plt.plot()` for line plots or `plt.bar()` for bar charts.

- Customize the plot: Add titles, labels, and legends with simple commands.

- Display the plot: Call `plt.show()` to visualize your creation.
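Put together, the steps could look like the sketch below. The data points and label text are arbitrary.

```python
import matplotlib.pyplot as plt

# Prepare the data (arbitrary example values).
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]

# Create the plot.
plt.figure(figsize=(6, 4))
plt.plot(x, y, marker="o", label="y = x squared")

# Customize it with a title, axis labels, and a legend.
plt.title("A simple line plot")
plt.xlabel("x")
plt.ylabel("y")
plt.legend()
plt.tight_layout()
```

In an interactive session, calling `plt.show()` afterwards opens the figure window; in a script that runs without a display, `plt.savefig()` with a file name of your choice is a common alternative.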

These steps quickly turn abstract numbers into vibrant visual stories.

Seaborn for Statistical Data Visualization

For those looking to enhance their visualizations with statistical context, Seaborn comes to the rescue. Built on top of Matplotlib, Seaborn simplifies complex visualizations while providing aesthetically pleasing defaults. Its capabilities include:

- Visualization of distributions: Use `sns.histplot()` or `sns.kdeplot()` for visualizing data distributions.

- Categorical variables: Functions like `sns.boxplot()` help summarize the distribution of data across categories.

- Integration with Pandas: Seaborn works seamlessly with Pandas DataFrames, making it easy to visualize data directly from these structures.

By utilizing Seaborn alongside Matplotlib, users can produce insightful and polished visualizations that serve to communicate findings effectively, transforming the way data is perceived and understood.

NLTK Library

Introduction to NLTK

As we shift our focus towards natural language processing (NLP), the Natural Language Toolkit (NLTK) library emerges as a powerful ally. This Python library offers a comprehensive suite of tools and resources for working with human language data, making it indispensable for anyone venturing into NLP. My introduction to NLTK was transformative; it opened my eyes to the richness of language and how it could be analyzed programmatically.

With a variety of text classification, tokenization, stemming, and tagging capabilities, NLTK serves as a foundation for many NLP tasks, equipping users to manipulate and understand text data.

Natural Language Processing in NLTK

NLTK provides an extensive range of functions that facilitate various NLP processes, including:

- Tokenization: Breaking down text into words or sentences.

- Part-of-speech tagging: Identifying grammatical categories like nouns and verbs.

- Named entity recognition: Recognizing names of people, organizations, and locations.

These components collectively help structure language data and extract meaningful insights from it, making NLTK a versatile tool for linguists and data scientists alike.

Text Analysis and Tokenization

A common use case in NLTK is text analysis, which begins with tokenization. This process involves dividing the text into individual words or sentences, providing a foundation for further analysis.

Here’s a simple way to tokenize text using NLTK:

- Import NLTK: Use `import nltk`.

- Download necessary resources: Run `nltk.download('punkt')` to access tokenization data (newer NLTK releases may ask for `'punkt_tab'` instead).

- Tokenize your text: Utilize `nltk.word_tokenize()` for word tokenization or `nltk.sent_tokenize()` for sentence tokenization.
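The steps above can be wrapped into a few small helpers, as in this sketch. The function names `ensure_punkt`, `tokenize`, and `top_words` are hypothetical, and requesting both `punkt` and `punkt_tab` is an assumption meant to cover older and newer NLTK releases.

```python
import nltk
from nltk.tokenize import sent_tokenize, word_tokenize


def ensure_punkt():
    """Fetch tokenizer data once (needs network access on the first run)."""
    # Assumption: which resource is needed depends on the installed NLTK version.
    for resource in ("punkt", "punkt_tab"):
        nltk.download(resource, quiet=True)


def tokenize(text):
    """Return (sentences, words) for the given text."""
    return sent_tokenize(text), word_tokenize(text)


def top_words(tokens, n=3):
    """Return the n most frequent alphabetic tokens, case-insensitively."""
    freq = nltk.FreqDist(t.lower() for t in tokens if t.isalpha())
    return freq.most_common(n)
```

A typical session would call `ensure_punkt()` once, pass some text to `tokenize()`, and then feed the resulting word list to `top_words()` to get a simple frequency distribution.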

The result is a list of tokens ready for deeper analysis, such as calculating frequency distributions or performing sentiment analysis. By harnessing NLTK, users can uncover patterns and insights in textual data, laying the groundwork for advanced NLP applications.

XGBoost Library

Introduction to XGBoost

As we explore advanced machine learning techniques, XGBoost emerges as a game-changer in the world of gradient boosting algorithms. Renowned for its speed and performance, it has gained a reputation as a go-to library for many data scientists and Kaggle competitors alike. My first experience with XGBoost was enlightening; within hours, I was transforming high-dimensional datasets into accurate predictive models, all thanks to its optimized architecture and scalability.

XGBoost excels in handling large datasets, making it particularly effective in competitions and real-world applications.

XGBoost for Gradient Boosting

XGBoost implements gradient boosting in a way that enhances flexibility and efficiency. This algorithm incorporates several advantages:

- Handling missing values: Built-in support for missing values ensures model training proceeds without pre-imputation.

- Feature importance: Automatically assesses feature significance, helping users refine their models effectively.

- Regularization: L1 and L2 regularization help prevent overfitting, enhancing model generalization.

These features collectively enable robust handling of complex datasets and deliver state-of-the-art performance.

Hyperparameter Tuning in XGBoost

A critical step in maximizing model performance is hyperparameter tuning. XGBoost offers a plethora of parameters to fine-tune, including:

- Learning rate (`eta`): Shrinks the contribution of each new tree, controlling how quickly the model adapts.

- Max depth: Limits the depth of trees, balancing complexity and performance.

- Subsample: Fraction of training rows sampled for each boosting round, which helps prevent overfitting.

Employing techniques like Grid Search and Random Search can assist in systematically exploring these hyperparameters. By optimizing your settings, you can significantly improve model accuracy and efficiency, reinforcing XGBoost’s position as a premier choice for predictive modeling in data science.