Introduction

Overview of Neural Networks

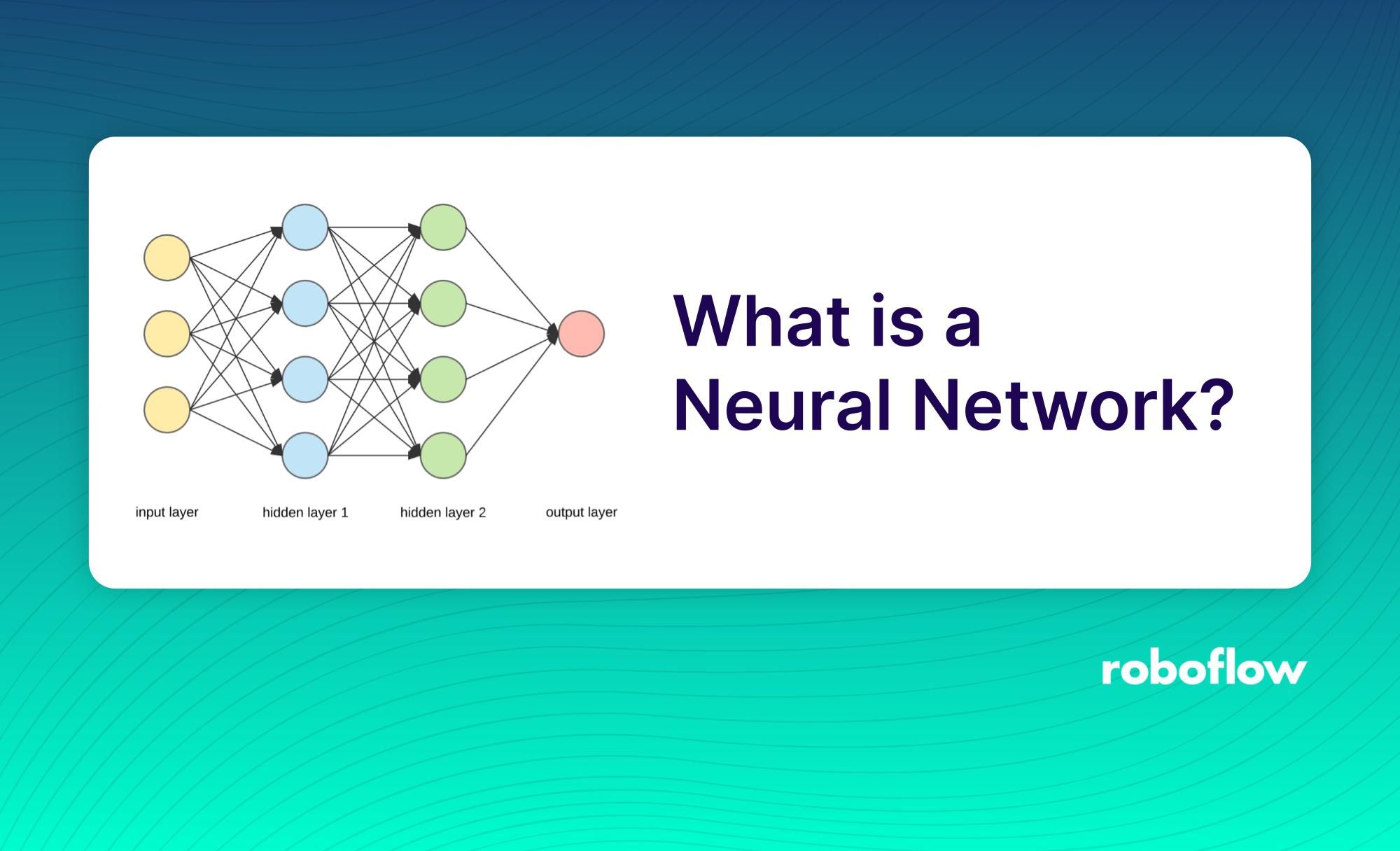

Neural networks are computational models loosely inspired by the way the human brain processes information. At their core, they consist of layers of interconnected nodes, termed neurons, that work together to identify patterns in data. Each neuron takes inputs, combines them into a weighted sum, applies an activation function, and passes the output to the next layer.

This structure allows neural networks to excel at tasks like image and voice recognition, revolutionizing industries from healthcare to entertainment.

Importance of Neural Networks in Machine Learning

Neural networks serve as the backbone of many advanced machine learning applications, such as:

- Image Recognition: Identifying and classifying objects from images.

- Natural Language Processing: Enabling machines to understand and interpret human language.

- Robotics: Guiding autonomous systems to navigate complex environments.

By mimicking cognitive functions, neural networks empower machines to learn from data, making them crucial for the ongoing evolution of artificial intelligence.

History and Development of Neural Networks

Origins of Neural Networks

The journey of neural networks dates back to 1943, when Warren McCulloch and Walter Pitts proposed a mathematical model of biological neurons as simple logic units. Their work laid the foundation for modern neural networks.

In the late 1950s, Frank Rosenblatt introduced the Perceptron, one of the first neural network algorithms capable of learning its weights from input data.

Milestones in Neural Network Development

The evolution of neural networks has been marked by several key milestones:

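- 1960s-1970s: Early forms of backpropagation emerged, though the technique saw little use in neural networks at the time.

- 1980s: Interest revived when backpropagation was popularized (notably by Rumelhart, Hinton, and Williams in 1986) as a practical way to train multilayer perceptrons on more complex patterns.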

- 2012: Breakthrough in deep learning when AlexNet won the ImageNet competition.

These milestones reflect neural networks’ gradual growth into the sophisticated tools shaping machine learning today. Each advancement brings us closer to replicating human-like cognition in machines.

Types of Neural Networks

Feedforward Neural Networks

Feedforward Neural Networks represent the simplest type of artificial neural network architecture. In this structure, information moves in one direction—from input to output—without any cycles. This straightforward design makes them effective for tasks like classification and regression.
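To make the one-directional flow concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the hand-picked weights, and the helper names (`dense`, `relu`, `feedforward`) are assumptions chosen for illustration, not part of any particular library.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Elementwise ReLU: negative values become zero."""
    return [max(0.0, v) for v in values]

def feedforward(x, layers):
    """Pass x through each (weights, biases) layer in order; nothing loops back."""
    for i, (w, b) in enumerate(layers):
        x = dense(x, w, b)
        if i < len(layers) - 1:  # hidden layers use ReLU, the output layer stays linear
            x = relu(x)
    return x

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden units -> 1 output
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]
output = feedforward([2.0, 1.0], layers)
print(output)
```

In a trained network the weights would be learned from data rather than written by hand.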

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are designed to process sequences of data. Through recurrent connections, an RNN carries a hidden state forward from one input to the next, so earlier inputs can influence later outputs. This is particularly useful in natural language processing, for example when predicting the next word in a sentence.
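The idea of carrying information forward can be sketched with a single recurrent unit. This is a simplified illustration: the scalar weights `w_x` and `w_h` and the `tanh` activation are assumptions, and practical RNNs use weight matrices and learned values.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a single-unit recurrent cell: h_t = tanh(w_x * x + w_h * h_prev + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, returning the hidden state after each input."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states stay non-zero:
# the hidden state still carries a fading trace of that first input.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```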

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) excel in image-related tasks. They use convolutional layers to automatically detect features, making them ideal for image recognition, facial detection, and more.
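As a rough sketch of what a convolutional layer computes, the function below slides a small kernel over an image and sums the elementwise products at each position. The kernel here is hand-picked for illustration, whereas a CNN learns its kernel values during training. It also assumes no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 image with a dark left half and a bright right half
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to left-to-right increases in brightness
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
print(feature_map)  # the middle column is non-zero, marking the edge
```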

Generative Adversarial Networks

Lastly, Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, that are trained against each other: the generator produces synthetic samples, and the discriminator tries to tell them apart from real data. This setup is well suited to generating realistic images and synthesizing video, showcasing the versatility of neural networks in creative applications. Each type of neural network serves distinct purposes, underscoring their adaptability and power in solving complex problems.

How Neural Networks Work

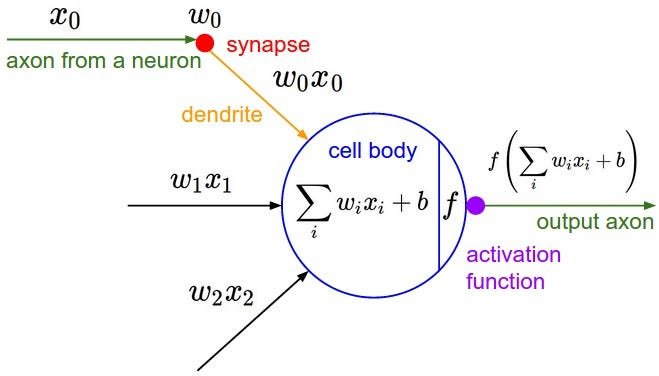

Neurons and Connections

At the heart of neural networks are neurons, simple processing units loosely modeled on biological brain cells. Each neuron receives inputs, processes them, and sends its output to connected neurons. Each connection carries a weight that determines how strongly that input influences the neuron’s output. The neuron computes a weighted sum of its inputs, typically adds a bias term, and passes the result through an activation function to produce its final output.
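A single neuron can be sketched in a few lines. The example below assumes a sigmoid activation and includes a bias term. The input values and weights are made up for illustration.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values: the two weighted inputs cancel out (0.5 - 0.5 = 0),
# so the sigmoid sits at its midpoint.
out = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(out)
```

Increasing a weight makes the corresponding input count for more in the sum, which is what "importance" means here.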

Activation Functions

Activation functions play a crucial role in introducing non-linearity into the network. Common functions include:

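- Sigmoid: Squashes any input into the range 0 to 1, which suits the output layer of a binary classifier.

- ReLU (Rectified Linear Unit): Outputs the input if it is positive and zero otherwise. It is cheap to compute, often converges faster than sigmoid in deep networks, and is widely used in hidden layers.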
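The two functions listed above are short enough to write out directly. This is a plain-Python sketch for scalar inputs. Libraries apply the same formulas elementwise to whole arrays.

```python
import math

def sigmoid(z):
    """Map any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Return z if it is positive, otherwise 0."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}")
```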

Forward Propagation and Backpropagation

During forward propagation, data flows through the network layer by layer to produce a prediction. The error between the prediction and the actual output is then measured, and backpropagation applies the chain rule to work out how much each weight contributed to that error. The weights are adjusted in the direction that reduces it. Repeating this two-step process enables neural networks to learn from data, continuously refining their models. Together, these components illustrate the intricate workings of neural networks, making them powerful tools for various applications.
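The two steps can be traced by hand on a deliberately tiny network: one input, one hidden unit with a `tanh` activation, and one linear output, trained on a single example with squared error. All of these choices, along with the starting weights and learning rate, are assumptions made to keep the sketch readable.

```python
import math

def forward(x, w1, w2):
    """Forward propagation: input -> hidden activation -> prediction."""
    h = math.tanh(w1 * x)
    y_hat = w2 * h
    return h, y_hat

def backward(x, y, h, y_hat, w2):
    """Backpropagation: apply the chain rule from the loss back to each weight."""
    d_y_hat = 2.0 * (y_hat - y)      # d(loss)/d(y_hat) for squared error
    d_w2 = d_y_hat * h               # y_hat = w2 * h
    d_h = d_y_hat * w2               # error passed back to the hidden unit
    d_w1 = d_h * (1.0 - h * h) * x   # tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

def train(x, y, w1=0.5, w2=0.5, learning_rate=0.1, steps=100):
    """Repeat forward pass, backward pass, and weight update."""
    losses = []
    for _ in range(steps):
        h, y_hat = forward(x, w1, w2)
        losses.append((y_hat - y) ** 2)
        d_w1, d_w2 = backward(x, y, h, y_hat, w2)
        w1 -= learning_rate * d_w1
        w2 -= learning_rate * d_w2
    return w1, w2, losses

w1, w2, losses = train(x=1.0, y=0.5)
print(f"loss before: {losses[0]:.4f}, loss after: {losses[-1]:.6f}")
```

Real networks follow the same pattern with many weights per layer and many training examples per update.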

Training Neural Networks

Data Preprocessing

To train neural networks effectively, data preprocessing is essential. This step ensures that the input data is clean and in the right format. Common preprocessing techniques include:

- Normalization: Scaling data to a standard range.

- Encoding: Converting categorical variables into numerical form.

- Splitting: Dividing data into training, validation, and test sets.

By preparing data properly, networks can learn more efficiently and accurately.
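The three techniques above can be sketched without any libraries. The function names, the 70/15/15 split, and the fixed shuffle seed are assumptions for illustration. In practice a data library would usually handle these steps.

```python
import random

def min_max_normalize(values):
    """Scale values linearly into [0, 1]. Assumes the values are not all identical."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def one_hot_encode(labels):
    """Turn each category into a 0/1 vector, with categories in sorted order."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

def split(rows, train=0.7, val=0.15, seed=0):
    """Shuffle a copy of rows, then cut it into training, validation, and test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train)
    n_val = round(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

print(min_max_normalize([10, 20, 30]))
print(one_hot_encode(["cat", "dog", "cat"]))
train_rows, val_rows, test_rows = split(range(20))
print(len(train_rows), len(val_rows), len(test_rows))
```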

Loss Functions

Loss functions measure how well a neural network’s predictions align with actual outcomes. By quantifying this error, they provide feedback for adjustments during training.

Popular loss functions include:

- Mean Squared Error: For regression tasks.

- Cross-Entropy Loss: For classification problems.
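Both losses can be written out in a few lines. The sketch below uses the binary form of cross-entropy, which is an assumption (multi-class problems use a generalization of it), and clips predictions with a small `eps` so that `log(0)` never occurs.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))
# A confident correct prediction is penalized far less than a confident wrong one.
print(binary_cross_entropy([1], [0.9]), binary_cross_entropy([1], [0.1]))
```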

Optimizers and Regularization

Finally, optimizers adjust the network’s weights based on feedback from the loss function. SGD (Stochastic Gradient Descent) moves each weight a small step against its gradient, scaled by a learning rate, while Adam additionally adapts the step size for each weight using running averages of past gradients. Regularization techniques help prevent overfitting so that the model generalizes well to unseen data: dropout randomly disables a fraction of neurons during training, and L2 regularization penalizes large weights. Together, these elements are crucial in developing robust neural networks.
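As a minimal sketch, the code below shows a plain SGD update with an optional L2 penalty, plus the "inverted" form of dropout that rescales the surviving activations. Adam is omitted for brevity. The function names and default values are assumptions, not any library's API.

```python
import random

def sgd_step(weights, grads, learning_rate=0.1, l2=0.0):
    """Plain SGD update. An L2 penalty of (l2 / 2) * w^2 adds l2 * w to each gradient."""
    return [w - learning_rate * (g + l2 * w) for w, g in zip(weights, grads)]

def dropout(activations, rate, rng):
    """Inverted dropout: zero each activation with probability `rate`,
    and scale the survivors by 1 / (1 - rate) to keep the expected value unchanged."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

print(sgd_step([1.0], [0.5]))          # step against the gradient
print(sgd_step([1.0], [0.0], l2=0.1))  # with zero gradient, L2 alone shrinks the weight
print(dropout([1.0] * 8, rate=0.5, rng=random.Random(0)))
```

Dropout is applied only during training. At prediction time all activations are kept.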

Applications of Neural Networks

Image Recognition

One of the most prominent applications of neural networks is in image recognition. Technologies like Convolutional Neural Networks (CNNs) have transformed how machines interpret visual information. For instance, social media platforms automatically tag friends in photos using this technology.

Natural Language Processing

Another exciting area is Natural Language Processing (NLP). Neural networks enable machines to understand and generate human language. Applications range from chatbots providing customer support to advanced translation services that break language barriers.

Autonomous Vehicles

Lastly, neural networks are at the core of autonomous vehicle technology. They analyze data from sensors to recognize obstacles, interpret signals, and make driving decisions. By integrating these functionalities, vehicles can navigate complex environments, showcasing the transformative power of neural networks across various sectors. Each application highlights their versatility and vital role in shaping our future.

Challenges and Limitations

Overfitting and Underfitting

While neural networks offer powerful capabilities, they come with their own set of challenges. A common issue is overfitting, where the model performs exceptionally well on training data but fails to generalize to new data. Conversely, underfitting occurs when the model is too simple to capture the underlying patterns and performs poorly even on the training data. Balancing the two can be tricky but is crucial for success.

Interpretability of Neural Networks

Another challenge is the interpretability of neural networks. Many models function as “black boxes,” making it difficult to understand how decisions are made. This lack of transparency can be problematic, especially in fields like healthcare, where understanding the reasoning behind predictions is vital.

Computational Resources

Lastly, training neural networks often requires substantial computational resources. Deep learning models need powerful GPUs and significant memory, which can be a barrier for smaller organizations. Addressing these challenges is essential for harnessing the full potential of neural networks while ensuring they remain practical and accessible for all users.

Future Trends in Neural Networks

Explainable AI

As neural networks continue to evolve, one significant trend is the rise of Explainable AI (XAI). With increasing scrutiny on AI decisions, developers strive to create models that offer insights into their workings. This trend not only enhances user trust but also helps ensure ethical practices in AI usage, especially in sensitive fields like finance and healthcare.

Neuromorphic Computing

Another exciting direction is neuromorphic computing, which mimics the brain’s neural structure. By creating hardware designed for neural network operations, researchers aim to achieve more efficient computation, drastically reducing energy consumption while enhancing processing speed. This shift could make advanced AI applications accessible in everyday devices.

Federated Learning

Lastly, federated learning is gaining traction. This technique allows a shared model to learn from decentralized data sources without the raw data leaving them: each participant trains locally and shares only model updates. Organizations can collaborate on a common model while maintaining data privacy. As these trends unfold, they promise to shape a future where neural networks are more transparent, efficient, and ethical, ultimately enhancing their impact on society.
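One common way to combine locally trained models is federated averaging, sketched below. The text above does not name a specific aggregation rule, so this choice is an assumption, as are the client weights and dataset sizes, which are made up for illustration.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighting each client by its local dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Hypothetical weights trained locally on three clients; only these numbers
# are sent to the server, never the underlying data.
client_weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
client_sizes = [100, 100, 200]
global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

The server would then send the averaged weights back to the clients for another round of local training.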

Conclusion

Recap of Neural Networks

In this exploration of neural networks, we’ve uncovered their fascinating structure, from individual neurons and their connections to the complexity of various architectures. These powerful models have transformed how we approach tasks like image recognition, natural language processing, and more.

Implications for the Future of Machine Learning

Looking ahead, neural networks hold immense promise for the future of machine learning. As trends like explainable AI and federated learning evolve, they will not only enhance the efficiency and transparency of neural networks but also broaden their applications across different sectors. By continuing to address challenges, such as interpretability and resource demands, we can unlock their full potential, paving the way for groundbreaking advancements in technology. Embracing these developments will be essential for leveraging machine learning in everyday life, ensuring it remains a cornerstone of innovation.