Introduction

Overview of Algorithms

Algorithms are the backbone of computer science, serving as step-by-step procedures for solving problems or performing specific tasks. Just like a recipe guides a chef through the cooking process, algorithms direct a programmer on how to manipulate data effectively. They range from simple procedures, such as scanning a list from start to finish, to more involved techniques such as dynamic programming and graph search.

Importance of Algorithms in Programming

The significance of algorithms in programming cannot be overstated. They are essential for:

- Efficiency: A well-structured algorithm can greatly reduce processing time.

- Scalability: Good algorithms can handle growing data sets without a hitch.

- Maintainability: Clear algorithms allow for easier updates and debugging.

For example, when developing an app, the choice of a sorting algorithm can influence both how quickly the app responds and how much memory it uses, which makes understanding algorithms crucial for any programmer, as highlighted by the insights shared on TECHFACK.

Searching Algorithms

Linear Search

Linear search is one of the simplest searching algorithms to understand and implement. Imagine you’re looking for your favorite book in a library where the books are arranged randomly. You would start from the first book and check each one until you find your desired title. This is essentially what linear search does—it checks each element one by one until it locates the target item.

- Efficiency: The average and worst-case time complexity is O(n).

- Usage: Best for smaller datasets or unsorted collections.
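The one-by-one check described above can be sketched in a few lines. This is a minimal illustration; the function name `linear_search` and the sample book list are made up for the example.

```python
def linear_search(items, target):
    """Return the index of the first element equal to target, or None."""
    for index, value in enumerate(items):
        if value == target:
            return index  # found it, stop scanning
    return None  # reached the end without a match


books = ["Dune", "Emma", "Ulysses", "Beloved"]
print(linear_search(books, "Ulysses"))  # 2
```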

Binary Search

On the other hand, binary search is a more efficient method but requires the dataset to be sorted. Picture this: You’re trying to find a specific book in a well-organized library where books are sorted by authors’ last names. You pick the book in the middle of the shelf and check whether your author comes before or after it. Either way, you can eliminate half of the remaining books from your search, and you repeat the process on the half that is left.

- Efficiency: The average and worst-case time complexity is O(log n).

- Requirements: Must be applied only to sorted datasets.
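The halving step can be sketched as follows. This is an illustrative version that assumes the input list is already sorted in ascending order; the name `binary_search` and the sample data are hypothetical. In practice, Python's standard `bisect` module covers the same ground.

```python
def binary_search(sorted_items, target):
    """Return the index of target in an ascending sorted list, or None."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return None


authors = ["Austen", "Herbert", "Joyce", "Morrison", "Woolf"]
print(binary_search(authors, "Morrison"))  # 3
```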

Both algorithms have their merits depending on the context, making them fundamental tools every programmer should master, as emphasized in discussions on TECHFACK.

Sorting Algorithms

Bubble Sort

Bubble sort is one of the most intuitive sorting algorithms. As its name suggests, it repeatedly “bubbles” larger elements toward the end of the list by comparing neighboring elements and swapping them when they are out of order. Think of it as rearranging a row of colored balls by weight: whenever a heavier ball (say, red) sits just before a lighter one (blue), they swap places, and after each pass the heaviest remaining ball has settled into its final position.

- Efficiency: The average and worst-case time complexity is O(n²), making it inefficient for larger datasets.

- Usage: Suitable for educational purposes and small datasets where simplicity is key.
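A minimal sketch of the adjacent-swap passes, assuming the elements can be compared with `>`. The early exit when a pass makes no swaps is a common optimization, not something the description above requires.

```python
def bubble_sort(values):
    """Return a new ascending list, sorted by repeated adjacent swaps."""
    result = list(values)  # work on a copy, leave the input untouched
    for end in range(len(result) - 1, 0, -1):
        swapped = False
        for i in range(end):
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in this pass, so the list is already sorted
    return result


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```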

Quick Sort

In contrast, quick sort introduces a more sophisticated approach. Imagine sorting a stack of books by first choosing a ‘pivot’ and then sorting everything around that pivot—those books less than the pivot go to one side, and those greater go to the other. This divide-and-conquer strategy is powerful.

- Efficiency: Quick sort has an average time complexity of O(n log n), although consistently poor pivot choices degrade it to O(n²) in the worst case.

- Flexibility: Works efficiently even on larger datasets and can be implemented using recursion.
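The pivot idea can be sketched recursively. This illustrative version picks the middle element as the pivot and builds new lists instead of partitioning in place, which costs extra memory but keeps the divide-and-conquer structure easy to see.

```python
def quick_sort(values):
    """Return a new ascending list using a recursive pivot partition."""
    if len(values) <= 1:
        return list(values)
    pivot = values[len(values) // 2]  # middle element as pivot (one of many choices)
    smaller = [v for v in values if v < pivot]
    equal = [v for v in values if v == pivot]
    larger = [v for v in values if v > pivot]
    return quick_sort(smaller) + equal + quick_sort(larger)


print(quick_sort([7, 2, 9, 4, 2, 1]))  # [1, 2, 2, 4, 7, 9]
```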

Both sorting algorithms demonstrate how different approaches can handle data organization, showcasing the rich diversity of methods programmers can employ, a topic often explored in detail on platforms like TECHFACK.

Graph Algorithms

Depth-First Search

Depth-first search (DFS) is like exploring a maze where you choose your path and follow it until you hit a dead end. You then retrace your steps to the last junction and explore an alternative path. This algorithm is particularly useful for traversing or searching tree or graph data structures.

- Traversal Style: Explores as far along a branch as possible before backtracking.

- Use Cases: Ideal for solving puzzles or problems like the N-Queens problem.
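A small recursive sketch of the idea, assuming the graph is stored as a dictionary that maps each node to a list of its neighbors. The `maze` data and the function name `dfs` are made up for illustration.

```python
def dfs(graph, start):
    """Return the nodes reachable from start in depth-first visiting order."""
    order = []
    seen = set()

    def visit(node):
        seen.add(node)
        order.append(node)
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                visit(neighbor)  # go as deep as possible before backtracking

    visit(start)
    return order


maze = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
print(dfs(maze, "A"))  # ['A', 'B', 'D', 'C']
```

For very deep graphs, an explicit stack avoids Python's recursion limit.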

Dijkstra’s Algorithm

Dijkstra’s Algorithm takes a more strategic approach to navigation. Picture yourself planning a route on a map, wanting to find the shortest path between two locations. Dijkstra’s is designed to find the shortest path from a starting node to all other nodes in a weighted graph whose edge weights are non-negative.

- Efficiency: A basic array-based implementation runs in O(V²) time; using a binary heap as the priority queue improves this to O((V + E) log V).

- Applications: Frequently used in network routing protocols and geographic mapping systems.
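Here is a heap-based sketch using Python's standard `heapq` module. It assumes the graph is a dictionary mapping each node to a list of `(neighbor, weight)` pairs with non-negative weights; the `roads` data is invented for the example.

```python
import heapq


def dijkstra(graph, source):
    """Return a dict of shortest distances from source to each reachable node."""
    distances = {source: 0}
    queue = [(0, source)]  # min-heap of (distance so far, node)
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances.get(node, float("inf")):
            continue  # stale entry: a shorter route to node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = dist + weight
            if candidate < distances.get(neighbor, float("inf")):
                distances[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return distances


roads = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}
print(dijkstra(roads, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```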

By understanding these graph algorithms, programmers can efficiently solve complex problems, enhancing their ability to develop innovative solutions—an insight frequently shared on the TECHFACK blog.

Dynamic Programming

Fibonacci Sequence

Dynamic programming shines when it comes to problems that can be broken into overlapping sub-problems, and the Fibonacci sequence is a classic example. Instead of calculating each Fibonacci number from scratch recursively, you can store previously calculated values in a table, allowing you to build on them efficiently.

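- Technique: Store results so that each value is computed only once, either by caching the results of recursive calls (memoization) or by filling an array from the smallest values upward (tabulation).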

- Example: Fibonacci(5) = Fibonacci(4) + Fibonacci(3) can leverage previously computed Fibonacci(4) and Fibonacci(3).
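Both ways of reusing earlier results can be sketched side by side: a top-down version that caches recursive calls with `functools.lru_cache`, and a bottom-up version that fills a table. The sketch assumes the convention Fibonacci(0) = 0 and Fibonacci(1) = 1.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib_memo(n):
    """Top-down: recursion with cached results."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


def fib_table(n):
    """Bottom-up: fill a table from the smallest values upward."""
    if n < 2:
        return n
    table = [0, 1]
    for i in range(2, n + 1):
        table.append(table[i - 1] + table[i - 2])
    return table[n]


print(fib_memo(5), fib_table(5))  # 5 5
```

Either version takes O(n) additions, compared with the exponential number of calls made by plain recursion.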

Knapsack Problem

Moving on to the Knapsack problem, this is a fantastic illustration of dynamic programming in action. Picture a treasure hunter deciding what items to carry in a limited-capacity knapsack, where each item is either taken whole or left behind (the 0/1 variant). The goal is to maximize the total value of the items within the weight limit.

- Approach: Use a two-dimensional table, indexed by the number of items considered and the capacity used, to track the maximum value achievable for each combination.

- Complexity: Time complexity is O(nW), where n is the number of items and W is the capacity of the knapsack.
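A compact sketch of the table-filling approach for the 0/1 variant, assuming integer weights and capacity. The item weights and values below are illustrative.

```python
def knapsack(weights, values, capacity):
    """Return the best total value for the 0/1 knapsack problem."""
    n = len(weights)
    # best[i][w] = best value using the first i items with capacity w
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for w in range(capacity + 1):
            best[i][w] = best[i - 1][w]  # option 1: skip item i
            if weights[i - 1] <= w:      # option 2: take item i if it fits
                with_item = best[i - 1][w - weights[i - 1]] + values[i - 1]
                best[i][w] = max(best[i][w], with_item)
    return best[n][capacity]


print(knapsack([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9
```

In this example the best choice is the items weighing 3 and 4, worth 4 + 5 = 9.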

Dynamic programming not only optimizes solutions but also enhances problem-solving skills, a core concept emphasized on platforms like TECHFACK.

Tree Algorithms

Tree Traversal

Tree traversal algorithms allow programmers to visit all the nodes in a tree structure. There are three primary methods:

- Inorder: Visit left subtree, then the node, followed by the right subtree. This yields sorted data for binary search trees.

- Preorder: Visit the node first, then the left and right subtrees. This is great for generating a copy of the tree.

- Postorder: Visit both subtrees before the node. This is useful for deleting the tree as it processes leaves first.

Imagine you’re organizing a family reunion, following the structure of a family tree to ensure every member is accounted for!
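The three visiting orders differ only in where the node itself is handled relative to its subtrees. A minimal sketch on a three-node binary search tree, using a hypothetical `Node` class:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def inorder(node):
    if node is None:
        return []
    return inorder(node.left) + [node.value] + inorder(node.right)


def preorder(node):
    if node is None:
        return []
    return [node.value] + preorder(node.left) + preorder(node.right)


def postorder(node):
    if node is None:
        return []
    return postorder(node.left) + postorder(node.right) + [node.value]


root = Node(2, Node(1), Node(3))
print(inorder(root))    # [1, 2, 3]
print(preorder(root))   # [2, 1, 3]
print(postorder(root))  # [1, 3, 2]
```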

Lowest Common Ancestor

The next essential concept is finding the Lowest Common Ancestor (LCA) of two nodes in a tree. This algorithm determines the deepest node that is an ancestor of both nodes, much like discovering the most recent common ancestor of two relatives in your extended family tree.

- Efficiency: With parent pointers, or in a binary search tree, it typically runs in O(h) time, where h is the height of the tree; a general binary tree without parent pointers needs a traversal that takes O(n) time.

- Applications: Useful in various computational problems and databases.
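One simple way to find the LCA, assuming every node knows its parent, is to collect the ancestors of the first node and then walk up from the second until one of them appears. The sketch below stores parents in a dictionary; the `family` data is invented, and a node counts as its own ancestor.

```python
def lowest_common_ancestor(parent, a, b):
    """Return the deepest node that is an ancestor of both a and b."""
    ancestors = set()
    node = a
    while node is not None:      # walk from a up to the root
        ancestors.add(node)
        node = parent.get(node)
    node = b
    while node is not None:      # first shared ancestor on b's path is the LCA
        if node in ancestors:
            return node
        node = parent.get(node)
    return None


family = {
    "grandma": None,
    "mom": "grandma",
    "uncle": "grandma",
    "me": "mom",
    "sister": "mom",
    "cousin": "uncle",
}
print(lowest_common_ancestor(family, "me", "cousin"))  # grandma
print(lowest_common_ancestor(family, "me", "sister"))  # mom
```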

Mastering tree algorithms enables developers to work efficiently with hierarchical data structures, a topic frequently explored in-depth on TECHFACK.

String Matching Algorithms

Brute Force

Brute force is the simplest string matching algorithm and essentially involves checking every possible alignment of the pattern within the text. Picture it as searching for a specific word in a lengthy book by reading each line—exhaustive but straightforward!

- Efficiency: This method has a worst-case time complexity of O(m*n), where m is the length of the pattern and n is the length of the text.

- Use Case: It can be effective for short strings where performance isn’t an issue.
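Checking every alignment can be sketched as follows. The function name `brute_force_search` is hypothetical, and the sketch returns the first match position or -1, mirroring the convention of Python's built-in `str.find`.

```python
def brute_force_search(text, pattern):
    """Return the index of the first occurrence of pattern in text, or -1."""
    n, m = len(text), len(pattern)
    for start in range(n - m + 1):             # every possible alignment
        if text[start:start + m] == pattern:   # compare up to m characters
            return start
    return -1


print(brute_force_search("abracadabra", "cad"))  # 4
```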

Knuth-Morris-Pratt Algorithm

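In contrast, the Knuth-Morris-Pratt (KMP) algorithm employs a more refined approach. It preprocesses the pattern to create a partial match table that records, for each prefix of the pattern, the length of the longest proper prefix that is also a suffix. After a mismatch, the search uses this table to shift the pattern without re-examining text characters that have already been matched.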

- Efficiency: This algorithm works in O(n + m) time, making it significantly faster for longer texts.

- Applications: It’s widely used in text editing and search functionalities.
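A sketch of both phases, table construction and search. The function names are illustrative, and the search returns the first match position or -1.

```python
def build_prefix_table(pattern):
    """table[i] = length of the longest proper prefix of pattern[:i+1]
    that is also a suffix of it."""
    table = [0] * len(pattern)
    length = 0
    for i in range(1, len(pattern)):
        while length > 0 and pattern[i] != pattern[length]:
            length = table[length - 1]  # fall back to a shorter prefix
        if pattern[i] == pattern[length]:
            length += 1
        table[i] = length
    return table


def kmp_search(text, pattern):
    """Return the index of the first occurrence of pattern in text, or -1."""
    if not pattern:
        return 0
    table = build_prefix_table(pattern)
    matched = 0  # number of pattern characters currently matched
    for i, ch in enumerate(text):
        while matched > 0 and ch != pattern[matched]:
            matched = table[matched - 1]  # reuse the partial match
        if ch == pattern[matched]:
            matched += 1
        if matched == len(pattern):
            return i - matched + 1
    return -1


print(build_prefix_table("ababaca"))  # [0, 0, 1, 2, 3, 0, 1]
print(kmp_search("aaaab", "aab"))     # 2
```

Note that the loop over the text never moves backward, which is where the O(n + m) bound comes from.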

Understanding these string matching algorithms not only enhances problem-solving capabilities but also empowers developers to build efficient search tools, a topic frequently discussed on TECHFACK.

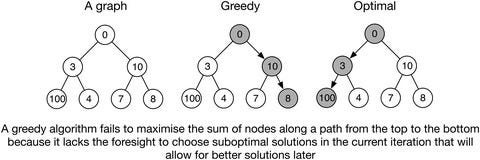

Greedy Algorithms

Coin Change Problem

The Coin Change Problem is a classic example of a greedy algorithm in action. Imagine you’re a cashier trying to minimize the number of coins given as change. By always selecting the largest denomination first, you efficiently reach the desired amount.

- Approach: Sort the denominations in descending order and repeatedly subtract the largest possible coin from the remaining amount until you reach zero.

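- Efficiency: Fast, and optimal for standard currency systems such as 25, 10, 5, and 1. For arbitrary denominations, greedy can return more coins than necessary, and dynamic programming is the reliable choice.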
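A short sketch of the largest-coin-first rule, assuming positive integer denominations. The second call shows a denomination set, chosen for illustration, where the greedy answer is valid but not minimal.

```python
def greedy_change(amount, denominations):
    """Return the coins picked by always taking the largest coin that fits."""
    coins = []
    for coin in sorted(denominations, reverse=True):
        while amount >= coin:
            amount -= coin
            coins.append(coin)
    if amount != 0:
        raise ValueError("amount cannot be made with these denominations")
    return coins


print(greedy_change(63, [25, 10, 5, 1]))  # [25, 25, 10, 1, 1, 1]
print(greedy_change(6, [1, 3, 4]))        # [4, 1, 1], although 3 + 3 uses fewer coins
```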

Job Sequencing with Deadlines

Next up is the Job Sequencing with Deadlines problem, which aims to maximize profit by scheduling jobs within their deadlines. Picture having several freelance gigs, each with a set due date and its own payout, where you can only work on one gig at a time and want to earn as much as possible.

- Approach: First, sort jobs by profit in descending order, then place each job in the latest free time slot on or before its deadline, skipping the job if no such slot is free.

- Applications: Useful in optimizing resources in project management and scheduling tasks.
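The scheduling rule can be sketched as below, under the common textbook assumption that every job takes exactly one unit of time and deadlines are positive integers. The job tuples `(name, deadline, profit)` are invented for the example.

```python
def schedule_jobs(jobs):
    """jobs: list of (name, deadline, profit), one time unit per job.
    Return (job names in time order, total profit)."""
    max_deadline = max((deadline for _, deadline, _ in jobs), default=0)
    slots = [None] * (max_deadline + 1)  # slots[t] holds the job run in slot t (index 0 unused)
    for name, deadline, profit in sorted(jobs, key=lambda job: job[2], reverse=True):
        for t in range(deadline, 0, -1):  # try the latest free slot first
            if slots[t] is None:
                slots[t] = (name, profit)
                break
    scheduled = [slot for slot in slots[1:] if slot is not None]
    return [name for name, _ in scheduled], sum(profit for _, profit in scheduled)


gigs = [("a", 2, 100), ("b", 1, 19), ("c", 2, 27), ("d", 1, 25), ("e", 3, 15)]
print(schedule_jobs(gigs))  # (['c', 'a', 'e'], 142)
```

Placing each job as late as possible keeps earlier slots open for jobs with tighter deadlines.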

Greedy algorithms, such as these, offer straightforward solutions to complex problems, highlighting the beauty of simplicity in programming—a point often highlighted on TECHFACK.

Backtracking Algorithms

N-Queens Problem

The N-Queens Problem is a fascinating challenge that involves placing N queens on an N×N chessboard so that no two queens threaten each other. It’s a bit like a brain-teasing game of strategy!

- Approach: The backtracking algorithm places queens one row at a time and checks for conflicts with previously placed queens. If a conflict arises, it backtracks, adjusting the position of the queens.

- Complexity: The number of possible placements grows very quickly with N, so the algorithm stays practical only because backtracking abandons a partial placement as soon as it contains a conflict.
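A row-by-row sketch of the approach. Each solution is represented as a list in which position `r` holds the column of the queen in row `r`; that representation is a choice made for this example.

```python
def solve_n_queens(n):
    """Return all solutions as lists of column indices, one per row."""
    solutions = []
    columns = []  # columns[r] = column of the queen already placed in row r

    def is_safe(row, col):
        for r, c in enumerate(columns):
            if c == col or abs(c - col) == abs(r - row):
                return False  # same column or same diagonal
        return True

    def place(row):
        if row == n:
            solutions.append(list(columns))
            return
        for col in range(n):
            if is_safe(row, col):
                columns.append(col)
                place(row + 1)
                columns.pop()  # backtrack and try the next column

    place(0)
    return solutions


print(solve_n_queens(4))       # [[1, 3, 0, 2], [2, 0, 3, 1]]
print(len(solve_n_queens(8)))  # 92
```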

Sudoku Solver

Moving on to the Sudoku Solver, backtracking plays a crucial role in solving these number puzzles where the objective is to fill a 9×9 grid. You place numbers in a way that every row, column, and 3×3 square contains the digits 1 to 9 without repetition.

- Approach: Similar to the N-Queens Problem, it tries to place a number in an empty cell, moving forward when no conflicts arise. If it hits a dead end, it backtracks to the previous cell and attempts a different number.

- Efficiency: Despite its exhaustive nature, backtracking significantly narrows down choices, making it a practical solution.
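A compact sketch of the try, recurse, and undo loop. It assumes the grid is a list of nine lists with 0 marking an empty cell, and it fills the grid in place; the sample puzzle is a widely circulated example.

```python
def is_valid(grid, row, col, digit):
    """Check that digit does not already appear in the row, column, or 3x3 box."""
    if digit in grid[row]:
        return False
    if any(grid[r][col] == digit for r in range(9)):
        return False
    box_row, box_col = 3 * (row // 3), 3 * (col // 3)
    for r in range(box_row, box_row + 3):
        for c in range(box_col, box_col + 3):
            if grid[r][c] == digit:
                return False
    return True


def solve_sudoku(grid):
    """Fill grid in place; return True if a complete solution was found."""
    for row in range(9):
        for col in range(9):
            if grid[row][col] == 0:
                for digit in range(1, 10):
                    if is_valid(grid, row, col, digit):
                        grid[row][col] = digit
                        if solve_sudoku(grid):
                            return True
                        grid[row][col] = 0  # dead end: undo and try the next digit
                return False  # no digit fits this cell, so backtrack
    return True  # no empty cells left


puzzle = [
    [5, 3, 0, 0, 7, 0, 0, 0, 0],
    [6, 0, 0, 1, 9, 5, 0, 0, 0],
    [0, 9, 8, 0, 0, 0, 0, 6, 0],
    [8, 0, 0, 0, 6, 0, 0, 0, 3],
    [4, 0, 0, 8, 0, 3, 0, 0, 1],
    [7, 0, 0, 0, 2, 0, 0, 0, 6],
    [0, 6, 0, 0, 0, 0, 2, 8, 0],
    [0, 0, 0, 4, 1, 9, 0, 0, 5],
    [0, 0, 0, 0, 8, 0, 0, 7, 9],
]
solved = solve_sudoku(puzzle)
print(solved)
```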

By employing backtracking algorithms, programmers can tackle seemingly complex problems with methodical strategies, a theme frequently explored in-depth on TECHFACK.

Machine Learning Algorithms for Programmers

K-Means Clustering

K-Means Clustering is a powerful and popular algorithm used in unsupervised learning to segment data into distinct clusters. Imagine you have a basket of fruit, and you want to group apples, oranges, and bananas based on their characteristics. K-Means simplifies this by assigning each piece of fruit to the closest cluster or center.

- Process: It starts by choosing K initial centroids, then alternates between assigning each data point to its nearest centroid and recalculating each centroid as the mean of the points assigned to it, until the assignments stop changing.

- Applications: Commonly used in customer segmentation and image compression.
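The assign-and-update loop can be sketched in plain Python. To keep the example reproducible, the initial centroids are passed in explicitly rather than chosen at random, and distance is measured as squared Euclidean distance; both are choices made for this illustration, as is the sample data.

```python
def k_means(points, centroids, iterations=10):
    """Return (final centroids, clusters) after at most `iterations` rounds."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            distances = [
                sum((p - c) ** 2 for p, c in zip(point, centroid))
                for centroid in centroids
            ]
            clusters[distances.index(min(distances))].append(point)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(tuple(sum(axis) / len(cluster) for axis in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: nothing moved
        centroids = new_centroids
    return centroids, clusters


points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centers, groups = k_means(points, [(0, 0), (10, 10)])
print(groups)  # [[(1, 1), (1, 2), (2, 1)], [(8, 8), (9, 8), (8, 9)]]
```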

Linear Regression

On the other hand, Linear Regression is one of the foundational algorithms in supervised learning, widely used to predict numeric outcomes. Picture trying to forecast the sales of a product based on its advertising expenditure. By plotting the data points and drawing a line of best fit, linear regression helps establish the relationship between variables.

- Functionality: In its most common form, ordinary least squares, it calculates the line that minimizes the sum of squared vertical distances between the data points and the line, which determines the best slope and intercept.

- Versatility: Used in various fields, including economics and biology for predictive modeling.
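For a single input variable, the least-squares line has a closed-form solution. The sketch below computes it directly; the function name `fit_line` and the advertising and sales figures are made up, and the sketch assumes the x values are not all identical.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance of x and y divided by variance of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


ad_spend = [1, 2, 3, 4]
sales = [3, 5, 7, 9]
print(fit_line(ad_spend, sales))  # (2.0, 1.0)
```

With the fitted values, a prediction for a new input `x` is simply `slope * x + intercept`.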

Understanding these machine learning algorithms empowers programmers to dive into data-driven projects, and this connection with programming is often discussed on platforms like TECHFACK.