Introduction

Defining Transfer Learning in Machine Learning

Transfer learning is a technique in machine learning that allows a model developed for a particular task to be reused as the starting point for a different but related task. The idea is similar to a student who has mastered one subject and uses that knowledge to learn a new but related subject more efficiently. For example, a model trained to recognize cats in images can be adapted to recognize dogs, because both tasks depend on similar visual features such as edges, textures, and shapes.

Significance of Transfer Learning in ML

The significance of transfer learning goes beyond convenience; it changes how models are trained in practice. Some of its most noteworthy effects include:

- Time efficiency: Models can be trained in less time by leveraging existing knowledge.

- Enhanced performance: It often leads to improved accuracy, particularly in scenarios with limited data.

- Broader applicability: Transfer learning makes it practical to apply machine learning in fields from healthcare to autonomous vehicles with comparatively little task-specific effort.

By enabling effective resource utilization, transfer learning is becoming a cornerstone of modern machine learning practices.

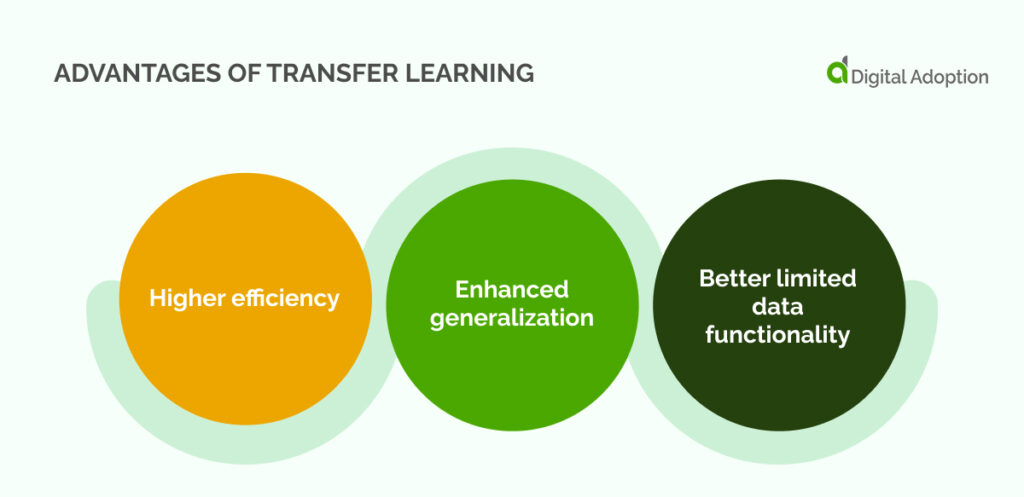

Benefits of Transfer Learning

Accelerated Learning Process

One of the most prominent benefits of transfer learning is a faster learning process. By starting from pre-trained models, developers can drastically reduce training time. Imagine starting a race from the halfway mark instead of the starting line; that is essentially what transfer learning offers. Practitioners can focus on fine-tuning instead of training from scratch.

Improved Model Performance

Transfer learning also often improves model performance. Models that build on knowledge from related domains tend to perform better, especially when task-specific data is scarce. For instance, a model that has already learned to identify features in general images is likely to recognize objects in a small, specialized dataset more accurately than a model trained on that dataset alone.

Cost-Efficiency

Finally, transfer learning is cost-efficient. It reduces the need for large labeled datasets and extensive computational resources, which makes it an attractive option for startups and smaller enterprises. Consider these key points:

- Reduced data requirements: Less data collection and labeling translates to lower costs.

- Lower computational cost: Fewer resources are consumed during training because much of the model has already been trained.

In summary, transfer learning not only accelerates development but also enhances performance while being economically viable, making it a compelling choice for businesses at all levels.

Applications of Transfer Learning

Image Recognition

Building on the benefits outlined earlier, transfer learning has transformed a range of applications, particularly image recognition. Models like VGG16 and ResNet, pre-trained on large datasets such as ImageNet, can be adapted quickly to identify specific objects in new images. For example, a medical imaging system might start from these general image features and be fine-tuned to detect tumors.

Natural Language Processing

In natural language processing (NLP), transfer learning has made significant strides with models like BERT and GPT. These models are pre-trained on vast text corpora, where they learn to capture context and subtle patterns of usage. That knowledge is then transferred to specific tasks such as text classification or question answering. This adaptability resembles a child learning language: once they grasp grammar, they can adapt quickly to varied themes and styles.

Recommendation Systems

Lastly, in recommendation systems, transfer learning can improve the user experience by carrying preferences across domains. For instance, a user’s movie preferences can inform book recommendations, keeping suggestions relevant even when the user has little history in the new domain.
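A deliberately simple way to picture this is a shared feature space. If movies and books are described with the same genre tags, a preference profile learned from a user's movie ratings can score books the user has never rated. The genre list, item names, ratings, and helper functions below are hypothetical. Production cross-domain recommenders typically learn shared embeddings rather than relying on hand-written tags, so treat this as an illustration of the idea only.

```python
# Hypothetical genre vocabulary shared by both domains.
GENRES = ["sci-fi", "romance", "mystery"]


def user_profile(ratings, item_genres):
    """Rating-weighted share of each genre among the items a user has rated."""
    profile = [0.0] * len(GENRES)
    for item, rating in ratings.items():
        for i, genre in enumerate(GENRES):
            if genre in item_genres[item]:
                profile[i] += rating
    total = sum(ratings.values())
    return [p / total for p in profile]


def rank_items(profile, item_genres):
    """Rank target-domain items by how much of the profile their genres cover."""
    scores = {
        item: sum(p for p, genre in zip(profile, GENRES) if genre in tags)
        for item, tags in item_genres.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Source domain: movies the user has rated (hypothetical data).
movie_genres = {"space_film": {"sci-fi"}, "love_film": {"romance"}, "detective_film": {"mystery"}}
movie_ratings = {"space_film": 5, "love_film": 1, "detective_film": 3}

# Target domain: books this user has never rated.
book_genres = {
    "robot_novel": {"sci-fi"},
    "romance_novel": {"romance"},
    "whodunit_novel": {"mystery"},
    "space_mystery_novel": {"sci-fi", "mystery"},
}

profile = user_profile(movie_ratings, movie_genres)
print(rank_items(profile, book_genres))
# ['space_mystery_novel', 'robot_novel', 'whodunit_novel', 'romance_novel']
```

The book that combines the user's two favorite movie genres ranks first, even though no book ratings were used.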

In summary, whether it’s identifying images, understanding language, or personalizing user experiences, transfer learning stands out as a powerful enabler across various industries.

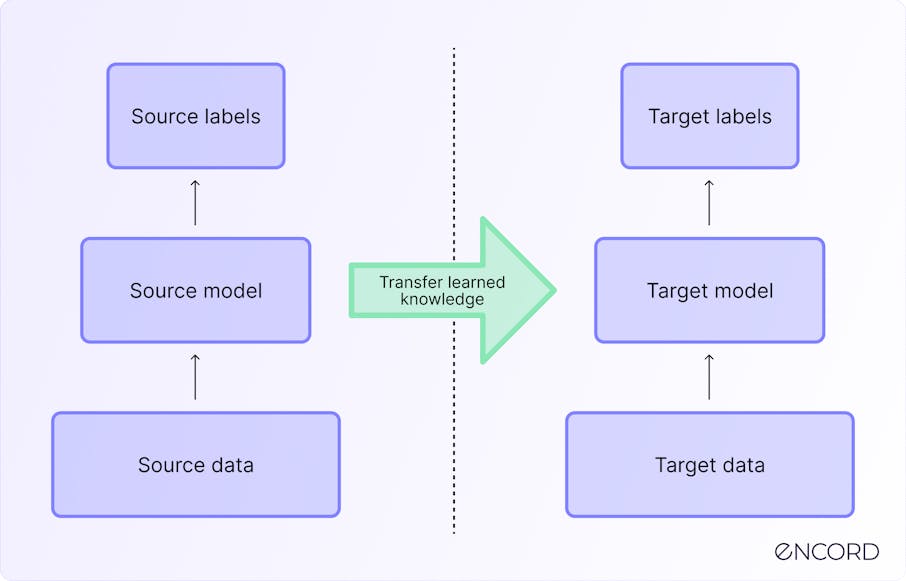

Techniques in Transfer Learning

Feature Extraction

As we delve into the techniques in transfer learning, one of the most widely used is feature extraction. This process takes a pre-trained model, keeps its weights frozen, and uses the features it has learned as inputs to a new model for the target task. It’s akin to using a well-crafted toolbox: the tools are ready to help you build something new without starting from scratch. For instance, in image classification, you might use the convolutional layers of a pre-trained model to extract features from your own images and train only a new classifier on top of them.
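The idea can be illustrated with a tiny, dependency-free sketch. The fixed matrix `PRETRAINED_W` is a hypothetical stand-in for layers learned on a source task; in a real system these might be the convolutional layers of a pre-trained network. The layer stays frozen, and only a new logistic-regression head is trained on the target data. All names, weights, and the toy dataset are assumptions made for illustration.

```python
import math

# Hypothetical "pre-trained" layer: fixed weights standing in for features
# learned on a source task. They are frozen and never updated below.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on the frozen features."""
    # Features are computed once, because the extractor never changes.
    feats = [(extract_features(x), y) for x, y in data]
    w, b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(head, x):
    w, b = head
    f = extract_features(x)
    return int(sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5)


# Toy target task (hypothetical): label is 1 when the first input exceeds the second.
data = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([3.0, 1.0], 1), ([1.0, 3.0], 0)]
head = train_head(data)
print([predict(head, x) for x, _ in data])
```

Because the extractor is frozen, its outputs can be computed once and cached. This is a large part of why feature extraction is cheap. In frameworks such as PyTorch, the same effect is usually achieved by setting `requires_grad = False` on the pre-trained parameters.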

Fine-Tuning

Next, fine-tuning takes this a step further. Instead of only extracting features, some or all of the pre-trained model’s weights are trained further, typically with a small learning rate, so they align better with the new data. This is particularly useful for a specific application, such as recognizing a unique type of product, because the model can learn subtle differences in the new dataset and improve its accuracy.
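A toy, dependency-free sketch of fine-tuning follows. The weights, names, and data are hypothetical. A new logistic-regression head is trained, and gradients also flow back into the pre-trained layer. That layer uses a learning rate ten times smaller than the head's, which is one common way to adjust previously learned weights only slightly.

```python
import math

# Hypothetical pre-trained layer weights (linear map followed by ReLU).
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(W, head, x):
    """Pre-trained layer (linear + ReLU) followed by a logistic-regression head."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    h = [max(0.0, v) for v in pre]
    w, b = head
    return pre, h, sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)


def log_loss(W, head, data):
    """Average binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = forward(W, head, x)[2]
        total -= math.log(p) if y == 1 else math.log(1.0 - p)
    return total / len(data)


def fine_tune(W, head, data, epochs=100, lr_head=0.5, lr_backbone=0.05):
    """Train the head and also adjust the pre-trained layer, more gently."""
    W = [row[:] for row in W]  # work on a copy; the original weights stay intact
    w, b = list(head[0]), head[1]
    for _ in range(epochs):
        for x, y in data:
            pre, h, p = forward(W, (w, b), x)
            err = p - y
            # Backpropagate into the pre-trained layer using the current head weights.
            for j in range(len(W)):
                if pre[j] > 0:  # ReLU passes gradient only where it is active
                    g = err * w[j]
                    W[j] = [wij - lr_backbone * g * xi for wij, xi in zip(W[j], x)]
            w = [wi - lr_head * err * hi for wi, hi in zip(w, h)]
            b -= lr_head * err
    return W, (w, b)


# Toy target data (hypothetical): label is 1 when the first input exceeds the second.
data = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([3.0, 1.0], 1), ([1.0, 3.0], 0)]
start_head = ([0.0, 0.0], 0.0)
before = log_loss(PRETRAINED_W, start_head, data)
W_ft, head_ft = fine_tune(PRETRAINED_W, start_head, data)
after = log_loss(W_ft, head_ft, data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

Setting `lr_backbone` to zero reduces this to pure feature extraction. Raising it toward `lr_head` lets the pre-trained layer drift further from what it learned on the source task. That can help when the new data differs more, but it can also increase the risk of overfitting on small datasets.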

Domain Adaptation

Finally, domain adaptation is crucial when the source and target domains differ significantly. For example, adapting models trained on synthetic data to real-world applications can be challenging but necessary. Re-weighting source examples so they better resemble the target distribution, or augmenting the training data, can help the model generalize and perform effectively in the new context.
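Re-weighting can take several forms. One common version, sketched below, is importance weighting under a covariate-shift assumption, meaning the input distribution changes while the relationship between inputs and labels does not. Each source example is weighted by an estimate of p_target(x) / p_source(x). The histogram density estimate, bin width, function names, and one-dimensional toy data are simplifying assumptions. Practical systems often estimate this ratio in other ways, for example with a classifier trained to tell source samples from target samples.

```python
from collections import Counter


def histogram_density(values, bin_width):
    """Estimate a discrete density as the fraction of samples in each bin."""
    counts = Counter(int(v // bin_width) for v in values)
    return {b: c / len(values) for b, c in counts.items()}


def importance_weights(source_x, target_x, bin_width=1.0):
    """Weight each source sample by p_target(bin) / p_source(bin)."""
    p_src = histogram_density(source_x, bin_width)
    p_tgt = histogram_density(target_x, bin_width)
    # Source samples in bins the target never visits get weight 0.
    return [p_tgt.get(int(x // bin_width), 0.0) / p_src[int(x // bin_width)]
            for x in source_x]


def weighted_mean(values, weights):
    """Weighted average; during training, `values` would be per-example losses."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical 1-D data: the target domain puts more mass in the upper bin.
source_x = [0.2, 0.5, 0.8, 1.2, 1.5, 1.8]
target_x = [0.5, 1.1, 1.4, 1.9]
weights = importance_weights(source_x, target_x)
print(weights)  # [0.5, 0.5, 0.5, 1.5, 1.5, 1.5]

# The re-weighted source average moves toward the target average.
print(sum(source_x) / len(source_x), weighted_mean(source_x, weights),
      sum(target_x) / len(target_x))
```

In a training loop, the same weights would multiply each source example's loss. Examples that look like the target domain would then count more than those that do not.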

In summary, understanding these techniques—feature extraction, fine-tuning, and domain adaptation—can empower practitioners to leverage transfer learning effectively across various applications.

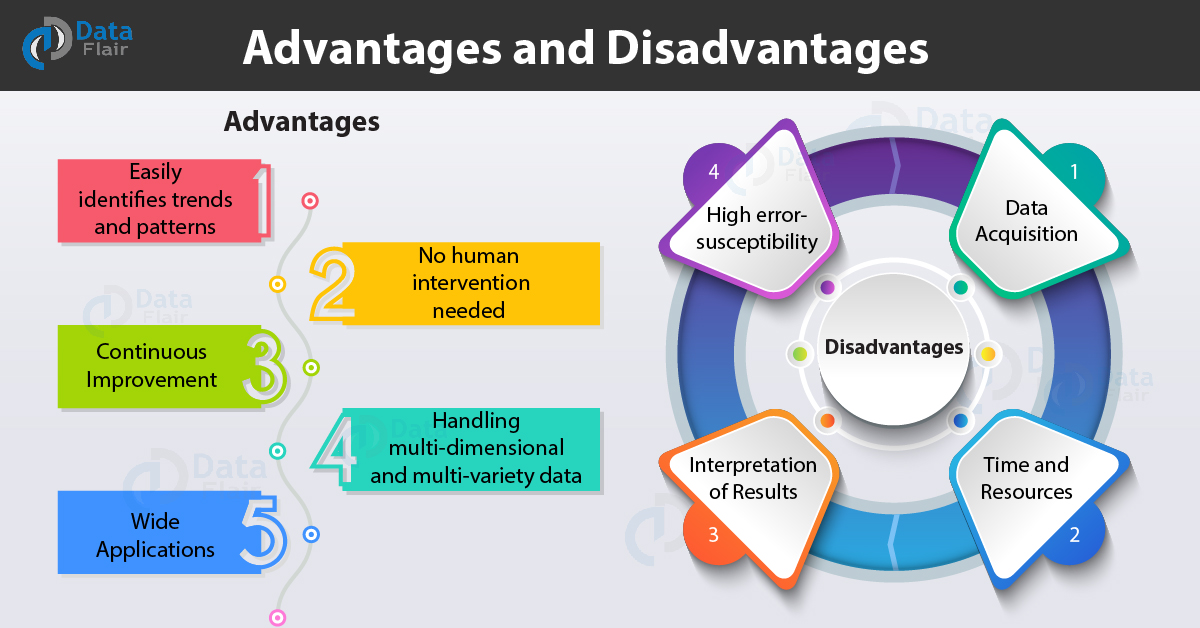

Challenges and Limitations

Lack of Domain Relevance

Despite the benefits of transfer learning, several challenges and limitations must be acknowledged. A primary issue is lack of domain relevance: when the source and target domains are too different, the learned features may not apply. For instance, using a model trained on urban landscapes to interpret rural environments could produce inaccurate results, much as city traffic rules are of little use on a farm track.

Overfitting Concerns

Another concern is overfitting. While fine-tuning a model can enhance its performance, it can also lead to overfitting, especially if the new dataset is small. This is like a student memorizing answers for a specific test without grasping the underlying concepts. Consequently, the model excels at the training data but struggles with real-world applications.
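A common guard against this is early stopping, sketched below. The loss on a held-out validation set is tracked after each epoch, and the weights from the best epoch are kept. Training stops once that loss has not improved for a set number of epochs (`patience`). The function and its parameters are illustrative. For simplicity, it scans a precomputed list of hypothetical validation losses, whereas a real training loop would compute one loss per epoch and save a checkpoint at each new best.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the epoch whose weights should be kept.

    Scanning stops after `patience` consecutive epochs without an improvement
    larger than `min_delta`; in a real loop, later epochs would never run.
    """
    best_epoch, best_loss = 0, float("inf")
    stale = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, stale = epoch, loss, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_epoch


# Hypothetical validation losses: steady improvement, a rise as the model
# starts to overfit, then a late dip that a short patience never reaches.
val_losses = [0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.5]
print(early_stopping_epoch(val_losses))              # 2
print(early_stopping_epoch(val_losses, patience=5))  # 6
```

The `patience` value involves a trade-off. A small value stops quickly once validation loss rises but can miss a later improvement, such as the final 0.5 here. A large value trains longer than may be needed.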

Data Bias Issues

Lastly, data bias poses a significant challenge. If a pre-trained model was built on biased data, it may perpetuate or even amplify those biases in new applications. This is a critical issue in sensitive areas such as hiring or legal decisions, and machine learning practitioners need to be aware of these limitations to mitigate their impact.
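One simple, partial check is to compare how often a model makes a positive prediction for each group, a demographic-parity comparison. The function names, predictions, and group labels below are hypothetical. A single metric like this flags only one kind of disparity and does not establish that a model is fair.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical screening decisions from a fine-tuned model, with group labels.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

Running the same check on the outputs of the original pre-trained model and of the fine-tuned model shows whether adaptation narrowed or widened the gap.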

In summary, while transfer learning holds great potential, understanding and addressing these challenges is essential for effective deployment in real-world scenarios.

Case Studies

Transfer Learning in Healthcare

Among real-world applications, transfer learning has made notable strides in healthcare. For instance, researchers have adapted models trained on general medical images to identify specific anomalies in radiographs. This is particularly valuable in settings where large labeled datasets are scarce. Imagine a rural hospital using a model trained on data from large urban hospitals and then adapted to its own patients: it could offer fast diagnostic support that might save lives.

Transfer Learning in Autonomous Driving

Similarly, in autonomous driving, transfer learning plays a key role in vehicle perception systems. Developers can start from models pre-trained on large datasets of diverse traffic scenarios and fine-tune them to recognize the particular challenges of different environments, such as city streets versus highways. This adaptability helps vehicles navigate safely and efficiently across different operating areas.

In summary, these case studies illustrate how transfer learning can bridge knowledge gaps, leading to innovative solutions in challenging fields like healthcare and autonomous driving.

Future Trends and Developments

Advancements in Transfer Learning Research

As we look ahead, the field of transfer learning is poised for significant advancements. Ongoing research is focusing on developing methods that improve model adaptability to different domains. Techniques such as unsupervised learning and meta-learning are gaining traction, allowing models to learn more effectively with minimal labeled data. It’s exciting to envision a future where machines can understand context as well as humans do!

Potential Applications in Various Industries

Moreover, the potential applications of transfer learning are extensive and continue to expand across various industries. For example:

- Finance: Transfer learning can enhance fraud detection systems by adapting models trained on different types of transactions.

- Retail: Transfer learning can customize product recommendations using knowledge drawn from diverse consumer behaviors.

- Agriculture: Models trained on satellite imagery can be adapted to monitor crop health in different climates.

In summary, the future of transfer learning is bright, with promising research and diverse applications that hold the potential to transform industries and enhance efficiency across countless sectors.

Conclusion

Recap of Transfer Learning Benefits

In summary, transfer learning offers benefits that have driven its adoption across many fields. By accelerating the learning process, improving model performance, and reducing costs, it serves as a powerful tool for machine learning practitioners. Whether in image recognition or natural language processing, the advantages of building on pre-trained models are substantial.

Insights on Leveraging Transfer Learning in ML Systems

As organizations consider integrating transfer learning into their ML systems, there are a few key insights to keep in mind:

- Select appropriate pre-trained models: Choose models that align closely with the target task to maximize relevance.

- Focus on data quality: Ensure that the new datasets used for fine-tuning are high-quality and representative.

- Monitor performance: Regularly evaluate the model’s performance to adjust strategies as necessary.

By thoughtfully applying these insights, businesses can harness the full potential of transfer learning, paving the way for innovation and efficiency in machine learning solutions.