Introduction

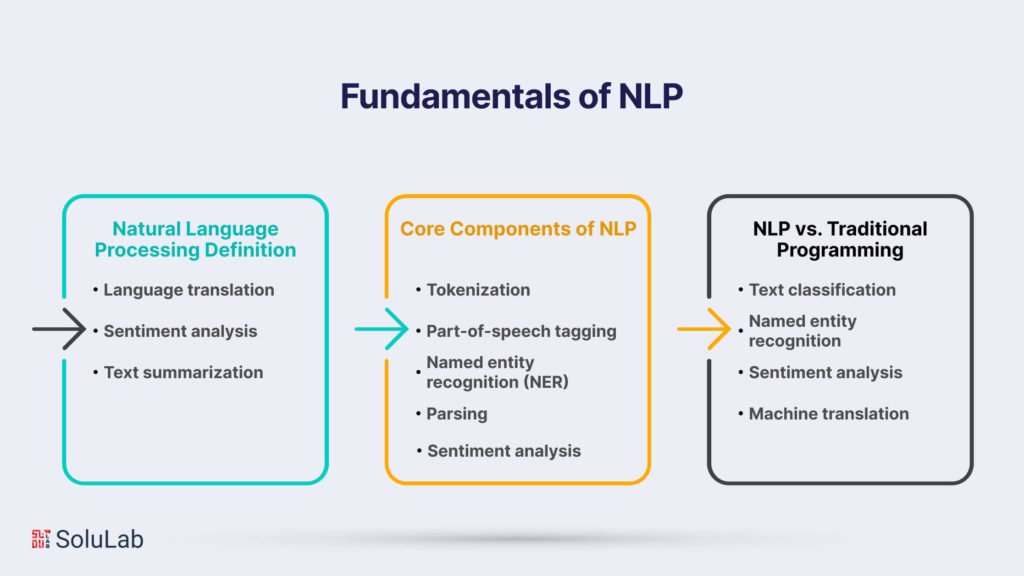

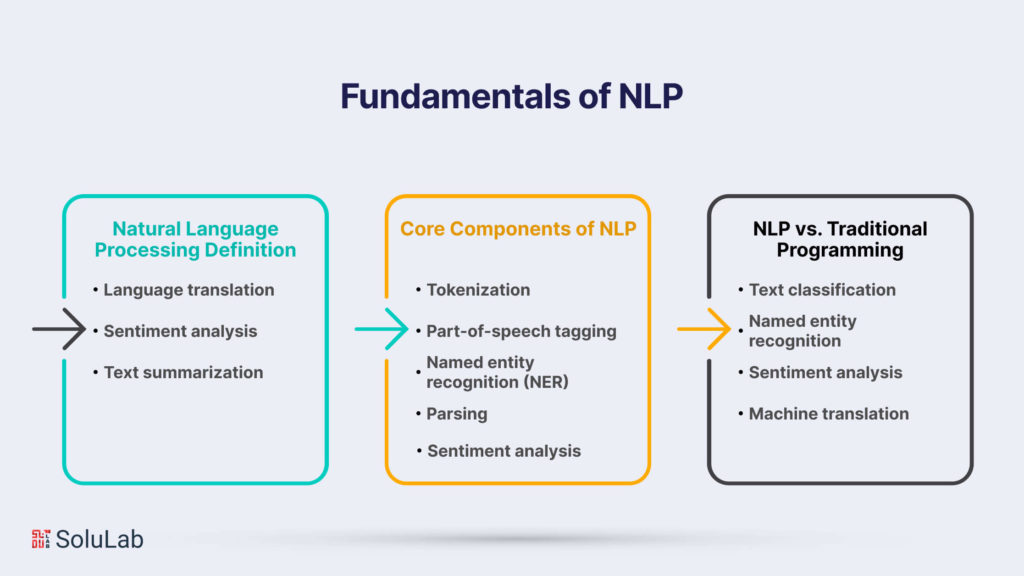

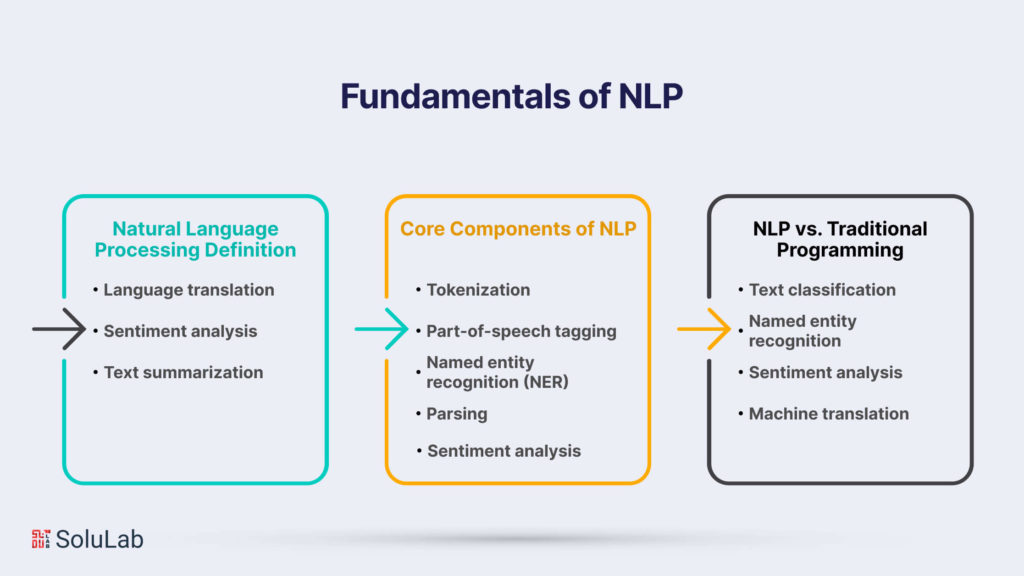

Definition of Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of artificial intelligence focused on the interaction between computers and humans through natural language. It enables machines to read, understand, and generate human language in a way that is both meaningful and contextually relevant. NLP encompasses a range of tasks, from simple text analysis to complex language generation. For example, when you ask a virtual assistant about the weather, NLP is what allows it to comprehend your question and respond accurately.

Role of NLP in Machine Learning

NLP and machine learning reinforce each other: NLP turns human language into representations that learning algorithms can consume, bridging the gap between unstructured text and models that expect structured input. Here’s how NLP enriches machine learning:

- Data Preparation: NLP transforms raw text into structured formats, ready for analysis.

- Feature Extraction: It helps in identifying and extracting relevant features from text data, improving model efficiency.

- Enhanced Decision-Making: Incorporating NLP allows models to make informed predictions based on linguistic attributes.

For instance, sentiment analysis models rely on NLP to gauge public opinion from reviews and social media posts. By integrating NLP, organizations can extract insights from large volumes of text that would otherwise go unused.
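
To make the data preparation and feature extraction steps more concrete, here is a minimal sketch of a bag-of-words representation, one of the simplest ways to turn raw text into numeric features. The function name `bag_of_words` and the sample documents are assumptions made for illustration, not part of any particular library.

```python
from collections import Counter

def bag_of_words(documents):
    """Turn raw strings into count vectors over a shared vocabulary."""
    # Lowercase and split on whitespace (a deliberately naive tokenizer).
    tokenized = [doc.lower().split() for doc in documents]
    # The vocabulary is every distinct word, sorted for a stable column order.
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors

# Illustrative documents (made up for this example).
docs = ["great coffee great service", "slow service"]
vocabulary, vectors = bag_of_words(docs)
print(vocabulary)  # ['coffee', 'great', 'service', 'slow']
print(vectors)     # [[1, 2, 1, 0], [0, 0, 1, 1]]
```

Each row is one document and each column is one vocabulary word, which is the kind of structured input a classifier can be trained on.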

Basics of Natural Language Processing

Text Preprocessing Techniques

To go deeper into NLP, it’s crucial to understand text preprocessing techniques. These methods prepare raw text data so that it can be analyzed effectively. Imagine trying to read a book filled with typos, inconsistencies, and irrelevant information: you’d likely struggle to grasp the message. Similarly, preprocessing cleans and organizes data to ensure clarity and consistency. Here are some common preprocessing techniques:

- Lowercasing: Converts all text to lowercase for uniformity, so that “Apple” and “apple” are treated as the same word.

- Removing Punctuation: Strips punctuation marks that carry little meaning for many analyses, so that “great!” and “great” are treated alike.

- Stop Word Removal: Filters out common words like “and,” “the,” and “is” that may not add significant meaning to the context.
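
The three techniques above can be chained into a small pipeline. This is a minimal sketch using only the Python standard library; the `STOP_WORDS` set is a tiny hypothetical list chosen for the example, and real stop-word lists are much longer.

```python
import string

# Hypothetical, deliberately tiny stop-word list for illustration.
STOP_WORDS = {"and", "the", "is", "a", "an", "of", "to"}

def preprocess(text):
    """Lowercase, strip ASCII punctuation, and drop stop words."""
    lowered = text.lower()
    # string.punctuation covers ASCII marks only; curly quotes would survive.
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return [word for word in no_punct.split() if word not in STOP_WORDS]

cleaned = preprocess("The Apple is red, and the apple is sweet!")
print(cleaned)  # ['apple', 'red', 'apple', 'sweet']
```

Note that both “Apple” and “apple” end up as the same token, which is exactly the effect lowercasing is meant to have.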

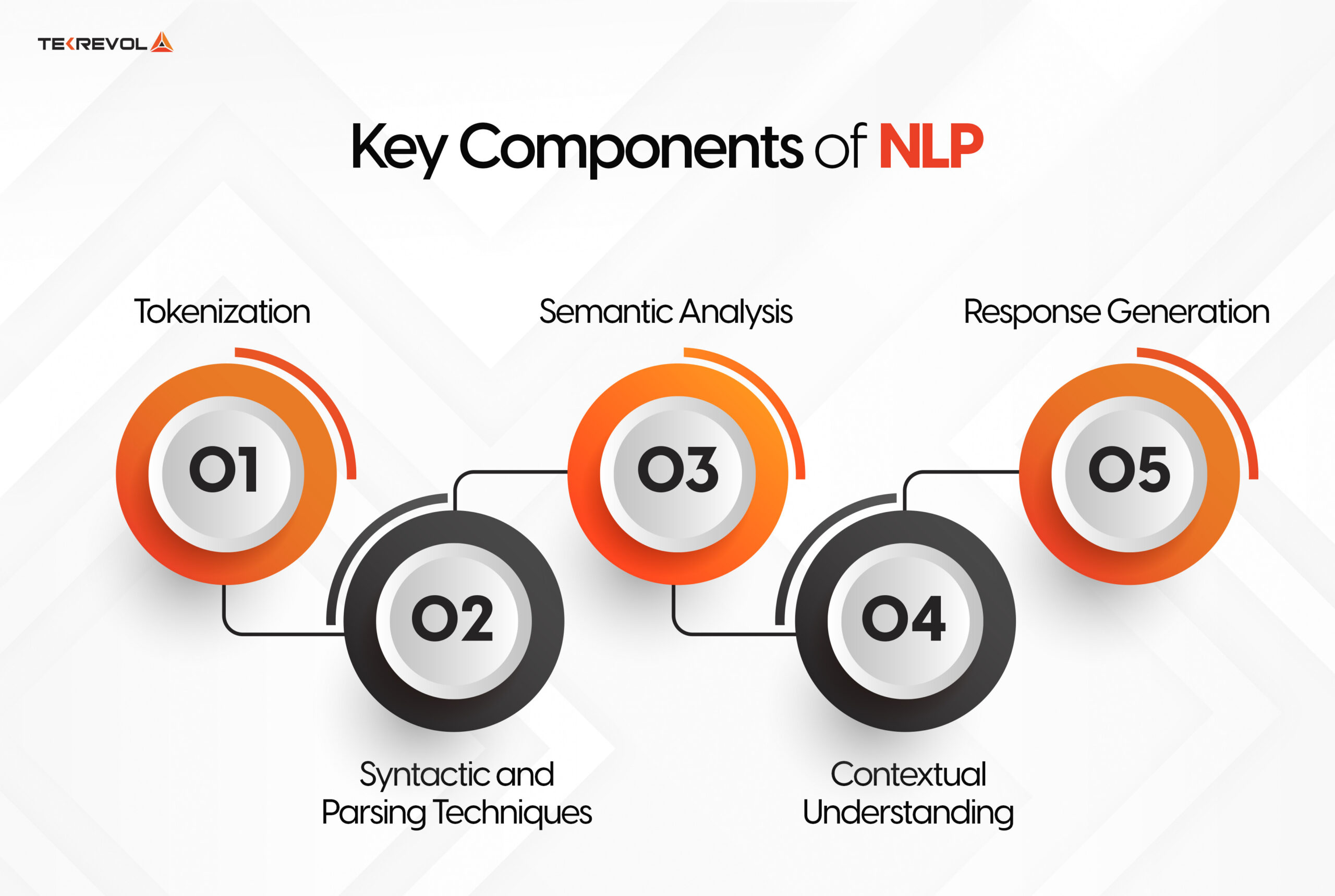

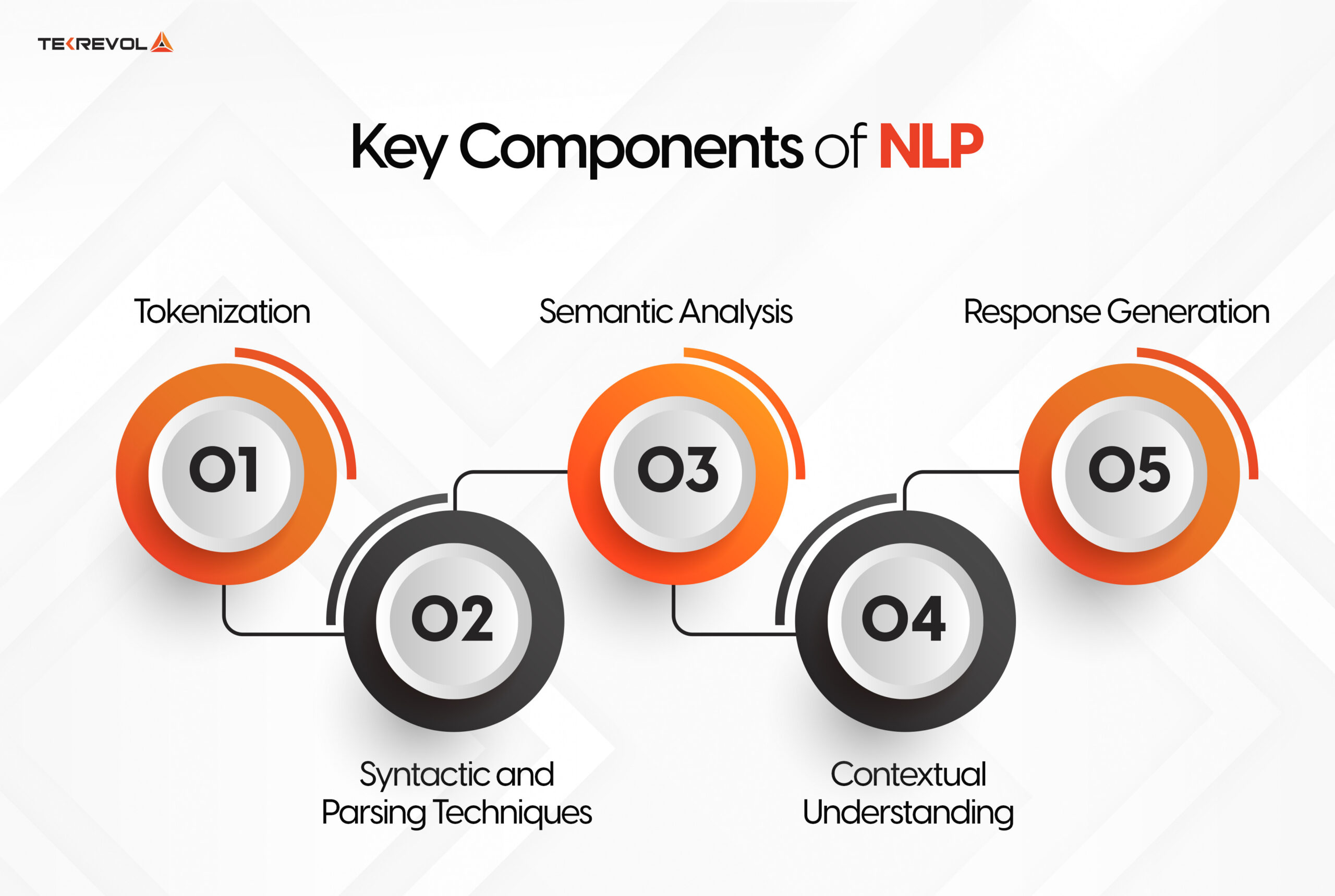

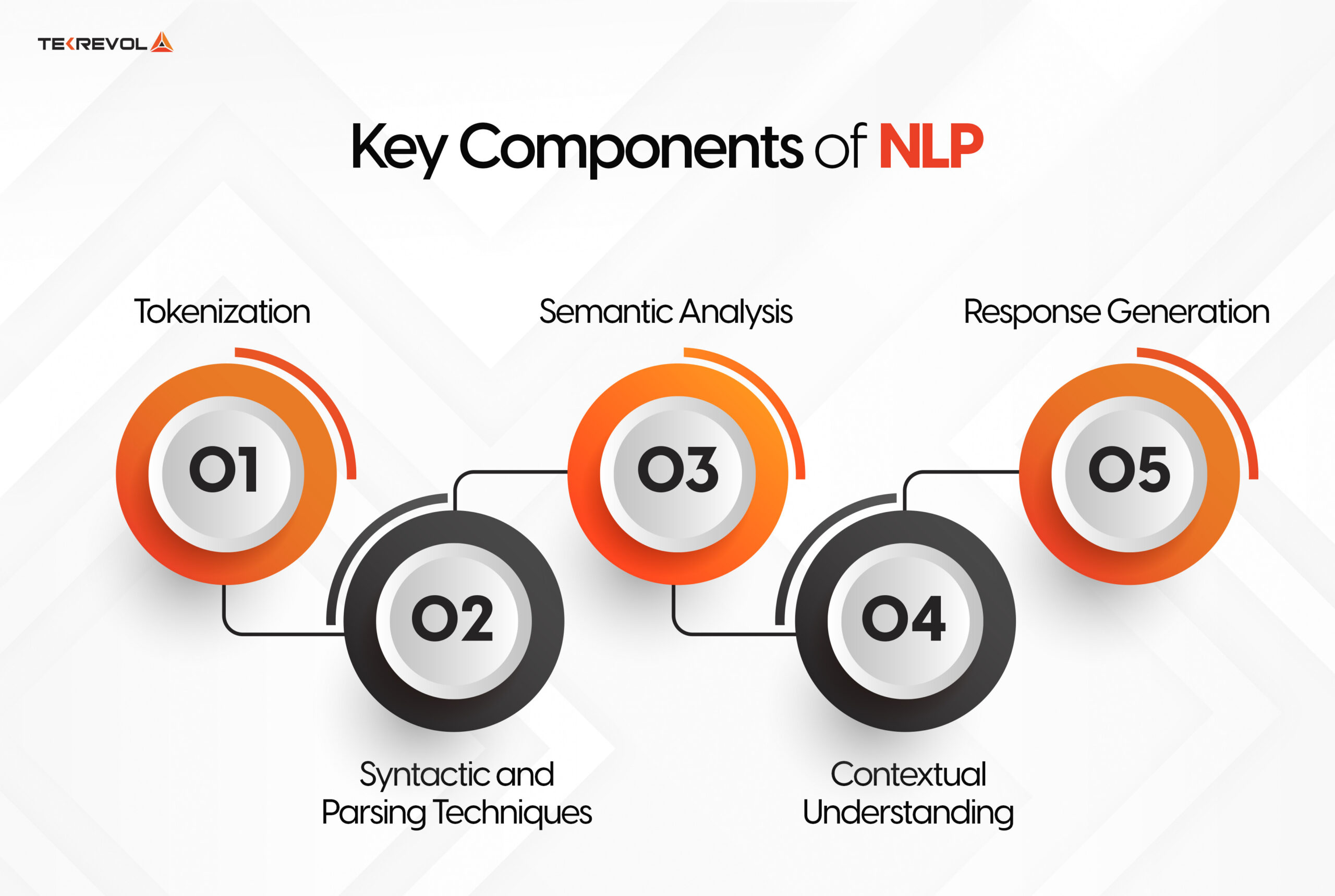

Tokenization and Lemmatization

After preprocessing, two fundamental techniques come into play: tokenization and lemmatization.

- Tokenization: This process splits text into smaller units called tokens, such as words, subwords, or punctuation marks. For example, with punctuation discarded, the sentence “NLP is fascinating!” becomes the tokens [“NLP”, “is”, “fascinating”].

- Lemmatization: This technique reduces words to their dictionary form (the lemma), so that different inflections are counted as one. For instance, “running” and “ran” both become “run.”

These techniques not only streamline analysis but also enhance a machine’s understanding of language, paving the way for more advanced NLP tasks.
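
As a rough illustration of both steps, the sketch below tokenizes with a regular expression and lemmatizes with a lookup table. The `LEMMAS` dictionary is a hypothetical stand-in: real lemmatizers rely on large dictionaries and part-of-speech information rather than a handful of hard-coded entries.

```python
import re

# Hypothetical lookup table standing in for a real lemmatizer's dictionary.
LEMMAS = {"running": "run", "ran": "run", "mice": "mouse"}

def tokenize(text):
    """Split text into word tokens, discarding punctuation."""
    return re.findall(r"[A-Za-z0-9']+", text)

def lemmatize(tokens):
    """Lowercase each token and map it to its lemma when one is known."""
    return [LEMMAS.get(token.lower(), token.lower()) for token in tokens]

tokens = tokenize("NLP is fascinating!")
print(tokens)  # ['NLP', 'is', 'fascinating']

lemmas = lemmatize(tokenize("The mice ran while I was running"))
print(lemmas)  # ['the', 'mouse', 'run', 'while', 'i', 'was', 'run']
```

Words missing from the table pass through unchanged apart from lowercasing, which is why a realistic lemmatizer needs far broader coverage.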

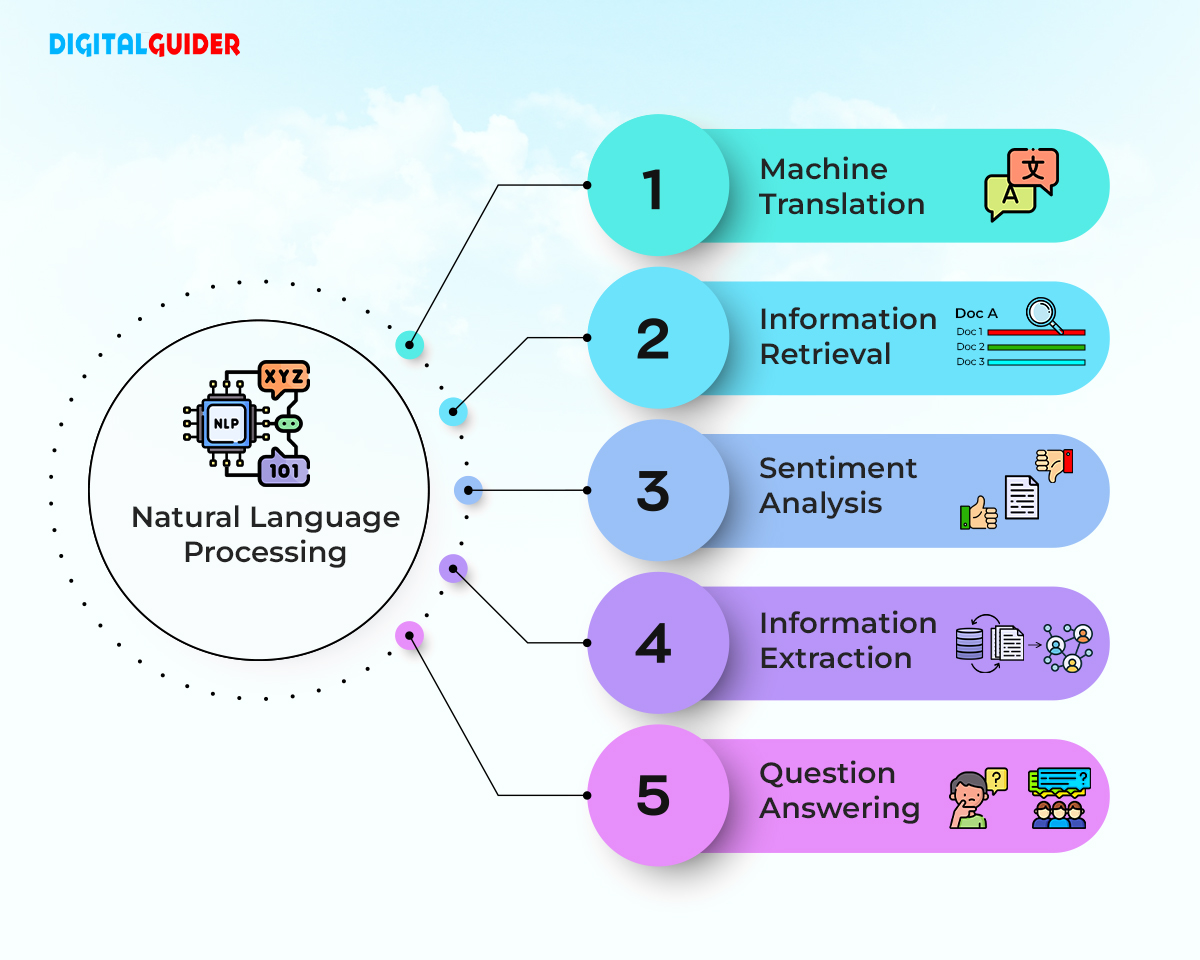

NLP Applications in Machine Learning

Sentiment Analysis

Continuing our exploration of NLP, one of the most impactful applications in machine learning is sentiment analysis. This technique allows businesses to gauge public opinion on their products, services, or even brand reputation. For instance, imagine a popular café that wants to know how customers feel about its new menu items. By analyzing reviews and social media posts, sentiment analysis can determine whether the feedback is positive, negative, or neutral.

Key components of sentiment analysis include:

- Text Classification: Assigning a sentiment label to a snippet of text.

- Polarity Detection: Measuring the direction and strength of sentiment, such as “love” being more positive than “like.”
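
One simple way to see how classification and polarity fit together is a lexicon-based scorer. The sketch below is only that: the `LEXICON` scores are invented for the example, and production systems usually learn these signals from labeled data instead of a hand-written word list.

```python
# Hypothetical polarity lexicon: the sign gives the direction,
# the magnitude gives the strength of the sentiment.
LEXICON = {"love": 2.0, "like": 1.0, "great": 1.5, "bad": -1.5, "hate": -2.0}

def polarity(text):
    """Sum the lexicon scores of the words in the text."""
    words = text.lower().split()
    return sum(LEXICON.get(word.strip(".,!?"), 0.0) for word in words)

def classify(text):
    """Map the polarity score to a sentiment label."""
    score = polarity(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify("I love the new latte!"))  # positive
print(classify("I hate the wait"))        # negative
print(classify("The menu changed."))      # neutral
```

A word-counting approach like this ignores negation and sarcasm ("not great" still scores as positive), which is one reason trained models are generally preferred.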

Named Entity Recognition

Another fascinating application of NLP in machine learning is Named Entity Recognition (NER). This technique identifies and categorizes key entities in text, such as people, organizations, and locations. For example, in the sentence “Apple is headquartered in Cupertino,” NER would detect “Apple” as an organization and “Cupertino” as a location.

Benefits of NER include:

- Automating Information Extraction: Streamlining data collection from vast sources like news articles or reports.

- Enhancing Search Functions: Improving the capability of search engines to provide relevant results based on recognized entities.
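
To show the shape of NER output, here is a toy sketch based on a lookup table, often called a gazetteer. The `GAZETTEER` entries and the label names are assumptions made for this example; real NER systems are typically statistical models that use the surrounding context.

```python
# Hypothetical gazetteer mapping known names to entity types.
GAZETTEER = {"Apple": "ORGANIZATION", "Cupertino": "LOCATION"}

def find_entities(text):
    """Return (name, label, start_offset) for the first match of each known name."""
    entities = []
    for name, label in GAZETTEER.items():
        start = text.find(name)
        if start != -1:
            entities.append((name, label, start))
    # Order the entities by where they appear in the text.
    return sorted(entities, key=lambda entity: entity[2])

sentence = "Apple is headquartered in Cupertino"
entities = find_entities(sentence)
for name, label, start in entities:
    print(name, label, start)
```

A pure lookup cannot tell Apple the company from apple the fruit, which is exactly the ambiguity that context-aware models are meant to resolve.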

Together, sentiment analysis and named entity recognition demonstrate how NLP can transform unstructured text into valuable insights, driving better decision-making and enhancing user experiences.

Advanced NLP Techniques

Word Embeddings

As we delve into more advanced NLP techniques, one standout method is word embeddings. This approach transforms words into numerical vectors, allowing machines to understand the context and relationships between words in a more nuanced way. For instance, the words “king” and “queen” may be close together in vector space, reflecting their similar contexts as royalty.

Key benefits of word embeddings include:

- Semantic Similarity: Capturing the meaning of words based on their context rather than just their spelling.

- Dimensionality Reduction: Representing each word as a dense vector of a few hundred dimensions instead of a sparse vector as long as the vocabulary, making text easier for models to process.

Models like Word2Vec and GloVe popularized this technique, creating richer representations of language.
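
Closeness in vector space is commonly measured with cosine similarity. The sketch below uses hand-picked three-dimensional vectors purely for illustration; they are not taken from Word2Vec, GloVe, or any trained model, whose vectors typically have hundreds of dimensions.

```python
import math

# Hypothetical 3-dimensional vectors chosen by hand for illustration.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

royal = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
fruit = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["banana"])
print(royal > fruit)  # True: "king" is closer to "queen" than to "banana"
```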

Sequence-to-Sequence Models

Another groundbreaking advancement in NLP is the sequence-to-sequence (Seq2Seq) model. This architecture is particularly effective for tasks involving translation and summarization. Simply put, Seq2Seq transforms one sequence of text into another, for example converting an English sentence into its French equivalent.

Benefits of Seq2Seq models include:

- Handling Variable Input/Output Lengths: Unlike models that expect fixed-size inputs and outputs, Seq2Seq can map an input of one length to an output of another, making it highly versatile.

- Attention Mechanisms: With the addition of attention, models can focus on different parts of the input sentence for more accurate translations.
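
The idea behind attention can be sketched in a few lines. The example below implements plain dot-product attention over a list of encoder states; the vectors are made-up numbers, and real Seq2Seq models learn these states and often use scaled or multi-head variants.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]  # shift for stability
    total = sum(exps)
    return [value / total for value in exps]

def attend(query, encoder_states):
    """Dot-product attention: weight each encoder state by its match with the query."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the weighted average of the encoder states.
    context = [
        sum(weight * state[i] for weight, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context

# Hypothetical encoder states for a three-word input sentence.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_states)
print(weights)  # the first and third states receive most of the weight
```

The decoder would compute a fresh set of weights at every output step, which is how it focuses on different parts of the input as the translation progresses.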

Together, word embeddings and Seq2Seq models represent powerful tools in modern NLP, enhancing machines’ ability to comprehend and generate human-like text.

Challenges and Future Trends in NLP

Overcoming Language Barriers

As we explore the challenges and future trends in NLP, one significant hurdle remains overcoming language barriers. With thousands of languages and dialects around the world, creating a unified NLP model that can effectively understand and translate multiple languages presents a formidable task. For instance, while some models excel in resource-rich languages like English and Spanish, they may struggle with less common languages.

To address these challenges, developers are focusing on:

- Cross-Lingual Models: Training models that can operate across multiple languages simultaneously.

- Data Augmentation: Increasing the availability of annotated data for underrepresented languages.

These advancements not only help in translations but also enhance cultural understanding and accessibility.

Integrating NLP with Deep Learning

The future of NLP is also closely intertwined with deep learning technologies. As research progresses, integrating NLP with deep learning methods promises to yield more sophisticated models capable of nuanced understanding and generation of language.

Key developments in this area include:

- Transformer Architectures: These have revolutionized NLP by enabling better context capture and parallel processing.

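- Pretrained Language Models: Models like BERT and GPT-3 have set new benchmarks, BERT on language-understanding tasks such as classification and question answering, and GPT-3 on generation tasks such as chatbots and content creation.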

By harnessing the power of deep learning, NLP can evolve into a more profound tool for understanding human communication, opening up exciting possibilities for businesses and consumers alike.

Case Studies

NLP in Virtual Assistants

Exploring the applications of NLP, one of the most prominent examples is its integration into virtual assistants like Amazon’s Alexa, Apple’s Siri, and Google Assistant. These virtual companions rely heavily on NLP to interpret user commands and provide meaningful responses. For instance, when a user asks, “What’s the weather today?” NLP allows the assistant to understand the context and fetch relevant data quickly.

The effectiveness of NLP in virtual assistants can be attributed to:

- Natural Language Understanding (NLU): This enables the assistant to process and comprehend user intent.

- Dialogue Management: NLP helps manage ongoing conversations, allowing for more natural interactions.

These capabilities enhance user experience, making technology feel more intuitive and user-friendly.

NLP in Language Translation

Another impactful case study is the use of NLP in language translation services, such as Google Translate. This tool uses advanced NLP algorithms to provide translations in real time, helping remove language barriers across the globe.

Key elements include:

- Neural Machine Translation (NMT): This approach leverages deep learning to produce more fluent translations by considering entire sentences rather than isolated words.

- Contextual Understanding: Improving the accuracy of translations by maintaining the context throughout sentences.

By implementing NLP in these areas, organizations can facilitate smooth communication and enhance global collaboration, truly transforming how we interact across languages.

Ethical Considerations in NLP

Bias and Fairness

As we navigate the landscape of NLP, it’s crucial to address the ethical considerations surrounding bias and fairness. NLP models are often trained on large datasets that may inadvertently reflect societal biases, leading to skewed outputs. For example, if a model is trained predominantly on texts that portray one demographic group favorably and others unfavorably or not at all, it may reproduce those stereotypes in its outputs.

To combat these biases, it’s essential to focus on:

- Diverse Training Data: Ensuring that datasets are representative of all demographics.

- Bias Detection Algorithms: Implementing tools to identify and mitigate bias in model outputs.

These efforts are vital for creating more equitable AI systems that treat all users fairly and justly.

Privacy Concerns

Privacy concerns also loom large in the realm of NLP, particularly as applications gather and analyze vast amounts of personal data. Take virtual assistants, for instance: while they provide convenience, they also record personal interactions, which can raise significant privacy issues.

To enhance privacy protection, organizations should consider:

- Data Anonymization: Removing personally identifiable information from datasets.

- User Consent: Ensuring transparency and obtaining consent before data usage.
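
As a small illustration of data anonymization, the sketch below redacts email addresses and phone numbers with regular expressions. The patterns are simplified assumptions (the phone pattern only matches the 3-3-4 digit layout shown) and would miss many real-world formats, so treat this as a starting point, not a complete solution.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

def redact(text):
    """Replace email addresses and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

redacted = redact("Contact jane.doe@example.com or 555-123-4567.")
print(redacted)  # Contact [EMAIL] or [PHONE].
```

Names, addresses, and other identifiers are harder to catch with patterns alone, which is where techniques like named entity recognition can help.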

By proactively addressing these ethical considerations, the NLP community can develop technologies that respect user rights while promoting a more responsible AI ecosystem.

Conclusion and Future Outlook

Impact of NLP on Machine Learning

In conclusion, Natural Language Processing (NLP) has made a profound impact on the field of machine learning, revolutionizing how machines understand and interact with human language. The integration of NLP enhances various applications, from chatbots that provide customer support to sophisticated translation services that connect people worldwide.

The ability to glean insights from vast text datasets enables organizations to harness the power of machine learning for:

- Enhanced User Experience: Personalized recommendations based on user feedback.

- Improved Data Analytics: Transforming unstructured data into valuable business insights.

As NLP technology evolves, it continues to bridge gaps in communication and understanding, significantly shaping the future landscape of AI.

Areas for Further Research

Looking ahead, several key areas warrant further research to optimize NLP’s potential:

- Reducing Bias: Developing methods to minimize biases in language models, ensuring fairer outcomes for all users.

- Multilingual Models: Creating more robust systems that can seamlessly operate across multiple languages.

By focusing on these areas, researchers and practitioners can unlock even greater capabilities for NLP, making it an indispensable tool in machine learning and broader AI applications. The future is bright, and the journey is just beginning.